Other Features

In prior chapters, we’ve discussed edge cases, which are usage patterns at the outside edge of what a typical user might do. Edge cases are the bane of software developers because they have to be accounted for, even though they occur infrequently. And sometimes the level of work that goes into handling an edge case will equal or exceed the level of work that goes into developing something that every user does, all the time.

One of the problems with writing complicated, feature-rich software is that any individual user of the software might only use 25% of its total features. But everyone uses a different 25%, which means that beyond the basic, core functionality, everything becomes an edge case to some extent.

Once a platform’s user base hits a significant size, every edge case can be a huge problem. If 30,000 people are using your software, and a feature is used by only 1% of them, that’s still 300 people who expect it to work with the same level of polish as everything else.

These users don’t know that this is an edge case. They don’t know that very few other people are using the feature. Just ask any vendor who has tried to remove a feature it didn’t think anyone was using, only to be greeted with howls of protest by a small subset of users who were depending on it.

In the previous four chapters, we’ve discussed the core features of web content management:

- Content modeling

- Content aggregation

- Editorial workflow

- Output management

It can be safely assumed that every implementation will use these four features to some degree or another. If a system falls down on one or more of these, it’s tough to get much done.

However, in this chapter, we’re going to talk about features on the edges. These are things that might be used in some implementations, and not in others. Some systems won’t implement some of these features, because they haven’t had a user base demanding them.

Features that address use cases on the edges often have a “backwater” feel to them. They’re somewhere off the mainstream, and might offer functionality that even experienced integrators (or members of the product’s development team itself!) have forgotten exists, or never knew about in the first place.

Backwater features are those that don’t get nearly the attention of the core features and are sometimes created just so a vendor can check a few boxes on an RFP and say it offers them. Consequently, their value tends to fall into narrow usage patterns.

Note that not everything discussed in this chapter is a “backwater feature,” but all the features we’ll look at here represent functionality that might not be present in all systems.

Multiple Language Handling

Content localization is a deep, rich topic. Technical documentation writers have been developing methods to manage multiple translations of their content for decades. Websites have just made the problem more immediate and more granular.

Having your website content in more than one language is often not a binary question, but rather one of degrees. In some cases, you might simply have two versions of your website, with all content translated into both languages. In others, however, you might have all of your content in your primary language, but only some of it translated into other languages.

Multiple languages add an additional dimension to your CMS. In terms of your content model, each attribute may or may not require additional versions of itself. The Title attribute of your News Release content type clearly has to exist in multiple versions, one for each language, but other attributes won’t be translated. The checkbox for Show Right Sidebar, for instance, is universal. To account for optional translation when modeling content, you might be required to indicate whether a particular attribute is “translatable” or not.

The end result is not a simple duplication of a content type, but rather a more complex selective duplication of individual content attributes. Content objects are populated from the required combination of universal attributes, translated attributes in the correct language, and “fallback” translations for attributes without the correct language.
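Here is a minimal Python sketch of this selective duplication. The `ContentObject` class and its attribute names are hypothetical, and so is the choice of English (`en`) as the fallback language. Real systems store this data in their own repositories and APIs.

```python
from dataclasses import dataclass, field

@dataclass
class ContentObject:
    # Universal attributes have one value for every language.
    universal: dict[str, object] = field(default_factory=dict)
    # Translatable attributes are stored per language code.
    translated: dict[str, dict[str, str]] = field(default_factory=dict)

def resolve(obj: ContentObject, language: str, fallback: str = "en") -> dict[str, object]:
    """Assemble the attribute values to render for one requested language."""
    result = dict(obj.universal)  # e.g., Show Right Sidebar is never translated
    for name, versions in obj.translated.items():
        if language in versions:
            result[name] = versions[language]
        elif fallback in versions:
            result[name] = versions[fallback]  # "fallback" translation
        # Attributes missing in both languages are simply omitted.
    return result

release = ContentObject(
    universal={"show_right_sidebar": True},
    translated={
        "title": {"en": "My News Release", "sv": "Mitt pressmeddelande"},
        "body": {"en": "Full text in English."},
    },
)
print(resolve(release, "sv"))
# -> {'show_right_sidebar': True, 'title': 'Mitt pressmeddelande', 'body': 'Full text in English.'}
```

The body has no Swedish translation yet. The Swedish page therefore shows the English body alongside the Swedish title.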

Nomenclature

When discussing multilanguage content, some terms can be tricky. Here are a few definitions to keep in mind:

- Localization is the translation of content into another language.

- Internationalization is the development of software in such a way that it supports localization.

For example, a CMS that is “internationalized” has been created in such a way that multiple languages can be supported, in either the content under management or the CMS interface itself.

That last point is worth noting: there’s a difference between a CMS that manages content in multiple languages and one that presents itself in multiple languages. If your Chinese translator doesn’t speak English (let’s assume she’s translating content from, say, Swedish), having a CMS interface that can only be presented in English can be a problem.

Language Detection and Selection

The language in which your visitor wants to consume content can be communicated via three common methods:

- The domain name from which the content is accessed

- A URL segment of the local path to the content

- The visitor’s browser preferences, communicated via HTTP header

In the first case, the content is delivered under a completely separate domain or subdomain – for example:

www.mywebsite.se

se.mywebsite.com

The first example requires that your organization own the country-specific domain name. This might be difficult if a country puts restrictions on who can purchase those domains (you may have to be incorporated or based in the country in question, for example). The second example uses a subdomain, which is available at no cost to the organization that owns the “mywebsite.com” domain.

An additional consideration is that “mywebsite.se” is considered to “belong” to a country. There are rumors that country-specific domains receive more favorable search engine optimization (SEO) treatment from search engines in those countries. In this instance, content served under the “mywebsite.se” domain might perform better in Google Sweden (google.se).

The ability to map languages by domain name may or may not be supported by a CMS. Some systems treat the alternative domain as a separate website. Others allow multiple domains to be mapped to a single site so that each domain serves a specific language.

If you don’t want to change domain names, many systems will allow for the first URL segment to indicate language. For example:

www.mywebsite.com/se/my-news-release

In this case, the “se” at the beginning of the local path is detected and Swedish-language content is served. In systems where URLs are automatically generated based on site structure, this URL segment is usually added automatically, is transparent to the editor, and will not map to any particular content object. (For now we’ll ignore the fact that the remainder of the URL should actually read “mitt-pressmeddelande”; we’ll talk more about that in the next section.)
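The first two methods can be sketched as a single lookup on the inbound URL. This is a hypothetical illustration: the mapping tables, the `detect_language` function, and the English default are all assumptions. The URL segment “se” is a site-chosen convention. Internally, the sketch maps it to “sv,” the ISO 639-1 code for Swedish.

```python
from urllib.parse import urlsplit

# Hypothetical site configuration.
DOMAIN_LANGUAGES = {"www.mywebsite.se": "sv", "se.mywebsite.com": "sv"}
SEGMENT_LANGUAGES = {"se": "sv", "en": "en"}  # first URL segment -> language code
DEFAULT_LANGUAGE = "en"

def detect_language(url: str) -> tuple[str, str]:
    """Return (language code, path to resolve) for an inbound request URL."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    # 1. A language-specific domain or subdomain wins outright.
    if host in DOMAIN_LANGUAGES:
        return DOMAIN_LANGUAGES[host], parts.path
    # 2. Otherwise, check whether the first path segment names a language.
    first, _, rest = parts.path.lstrip("/").partition("/")
    if first in SEGMENT_LANGUAGES:
        return SEGMENT_LANGUAGES[first], "/" + rest
    # 3. Neither applies: use the default (or go on to check Accept-Language).
    return DEFAULT_LANGUAGE, parts.path

print(detect_language("https://www.mywebsite.com/se/my-news-release"))
```

The language segment is stripped from the returned path. This matches the point above that the segment does not map to any particular content object.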

Finally, many systems support automatic detection of the language preference from the inbound request. Hidden in web requests are pieces of information called “headers” that your browser sends to indicate preferences. One such header looks like this:

Accept-Language: sv, en;q=0.9, fr;q=0.8

In this case, the user’s browser is saying, “Send me content in Swedish, if you have it. If not, I’ll take English. If not that, then use French as a last resort.”
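Parsing this header is mostly a matter of sorting by the `q` (quality) values. A missing `q` means 1, and `q=0` means “not acceptable.” The sketch below uses a hypothetical helper called `parse_accept_language`. Many web frameworks ship their own equivalent.

```python
def parse_accept_language(header: str) -> list[str]:
    """Return language tags from an Accept-Language header, most preferred first."""
    weighted = []
    for position, item in enumerate(header.split(",")):
        parts = item.strip().split(";")
        tag = parts[0].strip().lower()  # language tags are case-insensitive
        if not tag:
            continue
        quality = 1.0  # a tag without q= has the default weight of 1
        for param in parts[1:]:
            name, _, value = param.strip().partition("=")
            if name.strip() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0  # treat a malformed weight as unacceptable
        if quality > 0:  # q=0 explicitly rejects the language
            # Sort by descending quality; ties keep the header's original order.
            weighted.append((-quality, position, tag))
    return [tag for _, _, tag in sorted(weighted)]

print(parse_accept_language("sv, en;q=0.9, fr;q=0.8"))  # ['sv', 'en', 'fr']
```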

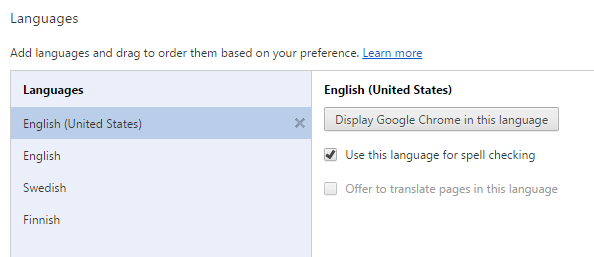

This language preference is built into your browser, and it can be modified in the browser settings. You may have to look deeply because it’s rarely changed, but I promise you it’s in there somewhere; see the figure for an example. For most users, this preference is set by the operating system based on the version they purchased, and then never changed. If you bought your copy of Windows in Sweden, the operating system language likely defaulted to Swedish. Internet Explorer will then automatically transmit an Accept-Language header preferring Swedish.

The language settings dialog in Chrome – these settings resulted in a header of Accept-Language: en-US,en;q=0.8,sv;q=0.6,fi;q=0.4

Language Rules

What if a user selects a language for which you do not have content? Your site might only offer its content in English and Swedish. What do you do with a browser requesting Finnish?

First, you need to understand that the information in the Accept-Language header, domain name, or URL segment is simply a request. The user is asking for content in that language. Clearly, you can’t serve a language you don’t have. Nothing needs to break in these cases; you just need to make choices about how you want to handle them.

You have a few options:

- Return a “404 Not Found,” which tells the visitor that you don’t have the content

- Serve content in the default language, perhaps with a notice that the content doesn’t exist in the language the user requested. In this case, we would serve English and perhaps explain to the user that Finnish wasn’t available by putting a notice at the top of the page.

- Fall back according to a set of rules. You could, for example, configure the system to treat Swedish and Finnish the same, so users requesting Finnish content would receive content in Swedish. Clearly, these are different languages, but many Finns also speak Swedish, which would at least get you into the right ballpark.
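The three options can be expressed as one hypothetical selection function. The available languages, the Finnish-to-Swedish rule, and the strategy names are assumptions for illustration. A return value of `None` stands in for sending a 404 Not Found.

```python
from typing import Optional

AVAILABLE = {"en", "sv"}   # hypothetical: languages the site actually has
DEFAULT = "en"
FALLBACKS = {"fi": "sv"}   # hypothetical rule: Finnish requests get Swedish

def choose_language(requested: list[str], strategy: str = "fallback") -> Optional[str]:
    """Pick the language to serve, or None to signal a 404 Not Found.

    strategy is "404", "default", or "fallback" (the three options above).
    """
    # Serve the first requested language we actually have.
    for lang in requested:
        if lang in AVAILABLE:
            return lang
    if strategy == "404":
        return None
    if strategy == "fallback":
        for lang in requested:
            mapped = FALLBACKS.get(lang)
            if mapped in AVAILABLE:
                return mapped
    # Serve the default language, ideally with a notice at the top of the page.
    return DEFAULT

print(choose_language(["fi"]))  # 'sv' under the fallback rule
```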

Assuming the content does exist in the language the user requested, how do you handle requests for specific-language content when rendering the rest of the page, especially the content in the surround? If you have 100% of the content translated, then this isn’t a problem, but what if the site is only partially translated?

In most cases, a CMS will require the specification of a “default” language (English, in our example), in which all of the content exists. This is the base language, and any other language is assumed to be a translation of content in the default language. For some systems, this might mean that content cannot exist in another language if it doesn’t exist in the default language first.

In a partially translated site, there might be content translations for some of the content in other languages. If someone requests one of these languages (Swedish, as an example), and you can manage to produce the desired content in that language, you still have the problem of rendering other content on the page – the navigation menu, for example – when parts of it may require content that has not been translated.

You have two options here:

- Simply remove any untranslated content.

- Display the content according to fallback rules.

With the first option, the site might shrink, sometimes considerably. If only 30% of the site is translated into Swedish, then the navigation will be considerably smaller. With the second option, all content will be available, but some links will be in Swedish and others in English (our default).
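Both options amount to a small branch when building navigation. The sketch below uses assumed data: each hypothetical menu item maps language codes to link text.

```python
# Hypothetical navigation items: language code -> link text.
NAV = [
    {"en": "Products", "sv": "Produkter"},
    {"en": "Careers"},                    # not yet translated into Swedish
    {"en": "Contact", "sv": "Kontakt"},
]

def build_menu(items: list[dict], language: str, default: str = "en",
               use_fallback: bool = True) -> list[str]:
    menu = []
    for item in items:
        if language in item:
            menu.append(item[language])
        elif use_fallback and default in item:
            menu.append(item[default])  # option 2: a mixed-language menu
        # option 1 (use_fallback=False): the untranslated item is dropped
    return menu

print(build_menu(NAV, "sv"))                      # ['Produkter', 'Careers', 'Kontakt']
print(build_menu(NAV, "sv", use_fallback=False))  # ['Produkter', 'Kontakt']
```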

Language Variants

There may also be regional variants of a language, specific to a country. In these cases, the language tag is hyphenated. Such tags are defined by IETF BCP 47, which combines ISO 639 language codes with ISO 3166 country codes. For instance, “fr-ca” is for “French as spoken in Canada,” and “en-nz” is for “English as spoken in New Zealand.”

Some countries mandate differentiation. For example, Norway has two official written standards:

- Bokmål: nb, the more widely used variant

- Nynorsk: nn, literally “new Norwegian”

All governmental bodies are required by law to publish 25% of their content in Nynorsk. In these cases, fallback rules are common. For the 75% of content that doesn’t have to be in Nynorsk, a request for content in “nn” would likely be configured to fall back to “nb”.
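Variant tags suggest a natural first fallback: drop subtags from the end, so that “fr-ca” becomes “fr.” This follows the spirit of the “Lookup” matching scheme in RFC 4647. After that, any configured rules apply, such as Nynorsk to Bokmål. This simplified sketch uses the registered tags “nb” (Bokmål) and “nn” (Nynorsk). The available-language set and the rule table are hypothetical.

```python
from typing import Optional

AVAILABLE = {"en", "fr", "nb"}       # hypothetical: languages the site has
CONFIGURED_FALLBACKS = {"nn": "nb"}  # hypothetical rule: Nynorsk -> Bokmål

def lookup(tag: str) -> Optional[str]:
    """Simplified lookup: try the tag, then configured rules, then shorter tags."""
    candidate = tag.lower()
    while candidate:
        if candidate in AVAILABLE:
            return candidate
        mapped = CONFIGURED_FALLBACKS.get(candidate)
        if mapped in AVAILABLE:
            return mapped
        # Drop the last subtag: "fr-ca" -> "fr", then "fr" -> "" (loop ends).
        candidate = candidate.rpartition("-")[0]
    return None  # the caller falls back to the site default or a 404

print(lookup("fr-CA"), lookup("nn"))  # fr nb
```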

Beyond Text

While text is the content most commonly affected by localization, it’s not the only content:

- Images and other media might have multiple translations, based on language or culture. It’s easy to inadvertently offend visitors from some countries by showing images outside their cultural norms. For example, for many years, it was common to avoid showing images of families with more than one child when delivering Chinese content. Similarly, in predominantly Muslim countries, the Red Cross is known as the Red Crescent, with a different logo to match the name.

- URLs might be specific per language, as we noted earlier. This adds a complication: a visitor can no longer switch to a different language simply by changing the language indicator (whether this be the domain name, a URL segment, or a request header), because the actual path to the content in another language is different. If someone changes the “se” language indicator in “/se/mitt-pressmeddelande” to “en,” they might get a 404 Not Found. “/en/mitt-pressmeddelande” is not a valid path, even though that content does exist in English under another path (“/en/my-news-release”).

- Template text will need to be changed. The word “Search” on an HTML button is often not content-managed but is baked into the template. It will need to be rendered as “Sök” when accompanying Swedish content. Depending on the platform and templating language, these text snippets are often stored in resource files managed alongside templates and then retrieved and included at render time.

- Template formatting is often culture-specific. For example, “$1,000.00” in English is “$1.000,00” in Italian and “$1 000,00” in Swedish. Clearly, the “$” is problematic too, but changing it to the symbol for the euro or krona fundamentally changes the value. The formatting culture is often available as a template setting, though it will need to be detected and set by the template developer.

- Template structure sometimes has to change based on the structural characteristics of the language. French, for example, takes up 30% more space than English on average, and German is notoriously long as well. Additionally, some languages are read right-to-left (Hebrew and Arabic, for example), and others can be written vertically (several East Asian languages, such as Chinese and Japanese). These languages can require major template changes, and sometimes even a specific template set all their own.
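Template text and number formatting are often handled by per-language lookup tables. The sketch below hard-codes hypothetical resource strings and separator pairs to show the idea. Production code would normally draw on CLDR locale data, for example through ICU or Python’s Babel package. That data also covers details such as which kind of space Swedish uses as a thousands separator. The currency symbol is deliberately left out, for the reason given above.

```python
# Hypothetical resource "files" kept alongside templates, keyed by language.
RESOURCES = {
    "en": {"search_button": "Search"},
    "sv": {"search_button": "Sök"},
}

# Hypothetical per-culture (thousands separator, decimal separator) pairs.
NUMBER_FORMATS = {"en": (",", "."), "it": (".", ","), "sv": (" ", ",")}

def text(key: str, language: str, default: str = "en") -> str:
    """Look up template text, falling back to the default language."""
    return RESOURCES.get(language, {}).get(key) or RESOURCES[default][key]

def format_amount(value: float, language: str) -> str:
    thousands, decimal = NUMBER_FORMATS[language]
    raw = f"{value:,.2f}"  # English-style first, e.g. "1,000.00"
    # Swap separators via a placeholder so "," and "." don't clobber each other.
    return raw.replace(",", "\x00").replace(".", decimal).replace("\x00", thousands)

print(text("search_button", "sv"), format_amount(1000, "it"))  # Sök 1.000,00
```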

Editorial Workflow and Interface Support

Content translation and language management is fundamentally an editorial concern, so the editorial interface and tools can provide functions to assist:

- In many cases, the editorial interface of a CMS can be configured to provide side-by-side presentation of content that needs to be translated. This allows a translator to view the content in one language while simultaneously rewriting the content in another, on the same screen.

- Editing languages can be limited by permissions to ensure that a monolingual English speaker doesn’t try to “wing it” and make a change to the Swedish version of a page.

- Translations might be lockable and grouped with the default language so new content can’t be published until translations in all other required languages have been added or updated.

- Many systems come with preconfigured workflows and user groups for adding additional translations. User groups can be language-specific, and beginning a workflow for a specific group can automatically add a new translation and route a task to a qualified translator.

- Rich text editors have varying support for multiple languages, especially languages written in non-Latin scripts, such as Arabic and Japanese. Symbol insertion might be required to add language-specific characters for users not possessing the correct keyboard mappings. An “O” is not an “Ø,” and if you don’t have this on your keyboard, you’re going to need to find a way to insert it.

- Links from one content object to another might need to be language-specific. In some cases, you might want to link to a specific piece of content in a specific language. By default, links will likely target the same language as the one used on the page on which they appear (Swedish to Swedish, for example), but if the linked-to content is not available in that language, another language will need to be selected.
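The publishing lock described above reduces to a check over the translations of one content object. This minimal sketch assumes a hypothetical `Version` record per language and a site-level set of required languages. A translation counts as current only if it was updated no earlier than the default-language version.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Version:
    language: str
    updated: datetime

REQUIRED_LANGUAGES = {"en", "sv"}  # hypothetical site setting

def can_publish(versions: list[Version], default: str = "en") -> bool:
    """True only if every required language exists and is at least as new as the default."""
    by_lang = {v.language: v for v in versions}
    if default not in by_lang:
        return False
    source_time = by_lang[default].updated
    return all(
        lang in by_lang and by_lang[lang].updated >= source_time
        for lang in REQUIRED_LANGUAGES
    )
```

A stricter system might compare revision numbers rather than timestamps. The shape of the check stays the same either way.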

External Translation Service Support

Many organizations provide commercial translation support, and your CMS might be able to communicate with the translation service directly through automation.

XLIFF (XML Localisation Interchange File Format; commonly pronounced “ex-lif”) is an OASIS-standard XML specification for the transmission of content for translation. Content can be converted to XLIFF and delivered to a translation firm, and the same file will be returned with the translated content included. Many systems will allow direct import of this content, adding the provided language translation (or updating an existing version).
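As a rough illustration, here is how a CMS might export translatable fields to an XLIFF 1.2 file and read the translated `<target>` elements back in. It uses only Python’s standard library. The function names and the choice of one `trans-unit` per field are assumptions, and translation vendors may expect additional metadata.

```python
import xml.etree.ElementTree as ET

XLIFF_NS = "urn:oasis:names:tc:xliff:document:1.2"

def to_xliff(content_id: str, fields: dict[str, str], source: str, target: str) -> str:
    """Build a minimal XLIFF 1.2 document with one trans-unit per translatable field."""
    ET.register_namespace("", XLIFF_NS)  # serialize without an ns0: prefix
    root = ET.Element(f"{{{XLIFF_NS}}}xliff", version="1.2")
    file_el = ET.SubElement(root, f"{{{XLIFF_NS}}}file", {
        "original": content_id,
        "source-language": source,
        "target-language": target,
        "datatype": "plaintext",
    })
    body = ET.SubElement(file_el, f"{{{XLIFF_NS}}}body")
    for name, value in fields.items():
        unit = ET.SubElement(body, f"{{{XLIFF_NS}}}trans-unit", id=name)
        ET.SubElement(unit, f"{{{XLIFF_NS}}}source").text = value
    return ET.tostring(root, encoding="unicode")

def read_targets(xliff: str) -> dict[str, str]:
    """Map each trans-unit id to its translated text ("" if not yet translated)."""
    root = ET.fromstring(xliff)
    ns = {"x": XLIFF_NS}
    return {
        unit.get("id"): unit.findtext("x:target", default="", namespaces=ns)
        for unit in root.iterfind(".//x:trans-unit", ns)
    }
```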

Taking this a step further, many translation vendors offer plug-ins for common CMS platforms that allow you to initiate an external translation workflow that automatically transmits content to the translation service. The content is translated and returned to the CMS, and the content object is updated and either published automatically or presented to an editor for review. Some of these plug-ins are provided at no cost as an enticement to use the translation service that offers them.

Personalization, Analytics, and Marketing Automation

In the current competitive business climate, many organizations are not buying a content management system so much as they’re buying a content marketing system. To explain why requires a little history.

Some time in the last decade, CMS vendors suddenly caught up to customers. For years, customers had been clamoring for more and more editorial and management features, and the vendors were constantly playing catch-up. However, there suddenly came a point where vendors reached rough parity with what editors were asking for, and were finally providing most of the functionality that editors and content managers wanted.

Continually trying to differentiate themselves from the competition, the commercial vendors looked for another audience with unmet needs, and they found the marketers. From there, the race was on to bundle more and more marketing functionality into their CMSs.

The content marketing industry had been around for years – companies like Adobe, HubSpot, eTrigue, and ExactTarget had long been providing content targeting and personalization services – but CMS vendors poured into that market. The link between content management and marketing was obvious, so the commercial vendors tried to strengthen their offerings with these tools.

The result has been marketing toolkits of varying functionality and applicability, built on top of and often bundled with a CMS (or available as an add-on or module for an additional fee).

Anonymous Personalization

A goal of any marketing-focused website is to adapt to each visitor individually. In theory, a single website could change in response to information about the visitor, and provide content and functionality that particular visitor needs at that particular moment, in an effort to more effectively prompt visitors to take action.

This functionality is collectively known as “personalization.” Two very distinct types exist:

- Known personalization, where the identity of the visitor is definitively known over time. Clearly, this only works when visitors proactively identify themselves, usually by logging in. Thus, each user has a permanent identity with the website, and actions and preferences can be tracked and stored for that user specifically. The users might have a “control panel” or other interface where they can modify their own settings.

- Anonymous personalization, where the visitor is not known to the website. In these cases, the website has to deduce information about the identity or demographic group of the visitor from clues provided through behavior or other information like geolocation, referring website, or even time of day.

Known personalization has been available for years. In these cases, users clearly know that the website has their identity, and often expect to be able to modify how the website interacts with them. The New York Times website, for example, has an entire interface devoted to allowing subscribing users to select their preferred news sections, and this information pervades their interaction with the service (even beyond the website, extending to the New York Times mobile applications).

But anonymous personalization has been the big trend of the last half-decade.

The first step of anonymous personalization requires marketers to create demographic groups into which they can segment visitors. Creating these groups requires the identification and configuration of multiple criteria to evaluate the visitor against. The criteria fall into three general types:

- Session: Factors specific to the visitor’s current session (e.g., location, incoming search terms, the technical parameters of the web request, etc.)

- Global: Factors related to external criteria and universal to all visitors and sessions (e.g., time of day, available content, etc.)

- Behavior: Factors related to the accumulated content that a specific visitor has consumed and the actions a visitor has taken on the website (usually in the current session, but optionally including previous sessions as well), with the assumption that users’ selection of this content contributes to their audience identification

For example, for a travel agency website, we could decide to apply extra marketing to our Caribbean travel packages by targeting visitors who might be feeling the effects of winter. To do this, we’ll identify a demographic group we’ll call Icebox Inhabitants. Our criteria for this group are:

- The visitor’s location must be north of the 40th parallel, or the current temperature in their location must be less than 50 degrees Fahrenheit.

- The current date must be between October 15 and March 15.

- The visitor must have viewed at least one vacation package to a location we have classified as “Warm Weather.”

Anyone falling into this group is “tagged” for their browsing session as an Icebox Inhabitant.
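The Icebox Inhabitants criteria translate fairly directly into code. In this sketch, the `Visitor` record and its fields are hypothetical stand-ins for whatever the personalization engine collects. The latitude would come from geolocation and the temperature from a weather service. “North of the 40th parallel” is read as a latitude greater than 40 in the Northern Hemisphere.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class Visitor:
    latitude: float        # session criterion, from geolocation
    temperature_f: float   # session criterion, from a hypothetical weather lookup
    viewed_tags: set[str] = field(default_factory=set)  # behavior criterion

def in_winter_window(today: date) -> bool:
    """Global criterion: October 15 through March 15 (the range spans the new year)."""
    month_day = (today.month, today.day)
    return month_day >= (10, 15) or month_day <= (3, 15)

def is_icebox_inhabitant(visitor: Visitor, today: date) -> bool:
    cold = visitor.latitude > 40 or visitor.temperature_f < 50
    return cold and in_winter_window(today) and "Warm Weather" in visitor.viewed_tags
```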

The second step of personalization is to use the information we’ve gained about the user to modify the site and its content in such a way as to elicit a desired reaction from the user. Some options provided by CMSs that have these capabilities include:

- Changing content elements, such as adding a promotional element in the sidebar, based on group membership

- Showing, hiding, or changing specific paragraphs of text or images inside rich text areas

- Template-level or API-level changes to alter rendering logic or other functionality

- Redirection, allowing the substitution of different content for specific URLs

- Modification of site navigation, by showing or hiding options from different demographic groups

Building on our previous example, we could include a custom promotional element in the sidebar for Icebox Inhabitants highlighting the temperature in Bora Bora and our fantastic vacation packages there. Additionally, we could load a supplemental stylesheet to incrementally change the color palette of the website to warmer colors.
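Once a visitor is tagged, applying the changes can be a simple rules lookup. The group-to-change mapping and the page model below are hypothetical. The sketch swaps the sidebar element and appends the warmer stylesheet without modifying the original page model.

```python
# Hypothetical mapping from personalization group to page changes.
GROUP_RULES = {
    "Icebox Inhabitants": {
        "sidebar": "promo-bora-bora",
        "stylesheets": ["warm-palette.css"],
    },
}

def personalize(groups: set[str], page: dict) -> dict:
    """Return a copy of the page model adjusted for the visitor's groups."""
    result = {**page, "stylesheets": list(page.get("stylesheets", []))}
    for group in sorted(groups):  # sorted so the outcome is deterministic
        rules = GROUP_RULES.get(group)
        if not rules:
            continue
        if "sidebar" in rules:
            result["sidebar"] = rules["sidebar"]
        result["stylesheets"].extend(rules.get("stylesheets", []))
    return result
```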

Implemented at a practical level, this allows a marketer to highlight relevant content for users who they think will react to it. At its most absurd extreme, this would allow the management of smaller, individual content elements that are then combined dynamically at request time to render a one-off, bespoke website for each and every user.

Clearly, with great power comes great responsibility. It’s quite easy to introduce usability problems by changing a website’s structure or content in real time. If a user has viewed personalized content and sends the URL to a friend, that friend might not see the same thing when he visits. The original user might not even see the same thing the next time she visits the site, or even the second time she navigates to the same page in the same session.

This also raises the question of how to handle search engine indexing. When the Googlebot (or even the site’s own indexer) visits the site, what content does it index? Do you leave it to the default, nonpersonalized content, or do you create a personalization group specifically for search engine indexers and display all the content, in an attempt to have as much indexed as possible? But then what happens when a page is returned by a Google search based on content that isn’t present unless the user’s behavior has put him into a specific personalization group?

Personalization is certainly an exciting feature, but it does call into question one of the core principles of the World Wide Web: content is singularly identified by a URL. On a heavily personalized site, a URL is really just the “suggestion” of content. The actual content delivered in response to a URL can be highly variable.

Analytics Integration

Website analytics systems are not new, but there was a push some years ago to begin including this functionality inside the CMS. The result was analytics packages that were not providing much in the way of new functionality, but were simply offering it inside the CMS interface.

The key question is: what new functionality does integrating with a CMS enable? The answer, seemingly, is not much. Analytics is mainly based on two things:

- The inbound request itself

- Client-side events tied to activity on the page after it loads

The inbound request is usually captured before the CMS has even come into play, and client-side events are tracked using code developed during templating. Given this, the value of analytics integration is questionable.

The trend in the years since then has been to integrate analytics packages from other vendors, the most common being Google Analytics. Many systems now offer the ability to connect to a designated Google Analytics account for the site the CMS manages and show that information inside the interface, mapped to the content itself.

Editors might be able to view a piece of content in the CMS, then move to a different tab or sidebar widget inside the same interface to view analytics information on that content specifically.

With the personalization functionality described in the last section, some analytics reporting might have value in terms of reporting how many visitors fulfilled the criteria for a specific demographic group. This would give editors some idea of how common or rare a particular visitor profile is.

Marketing Automation and CRM Integration

Beyond the immediate marketing role of the website, there’s a larger field of functionality called “marketing automation” that seeks to unify the marketing efforts of an organization through multiple channels. This is what’s being used when you get a series of emails from a company, click on a link in one (with a suspiciously long and unique URL), and that action seemingly exposes you to a new round of marketing geared specifically to that subject.

Clearly, your actions are being tracked across multiple platforms, with all your actions feeding a centralized profile based on you in the vendor’s customer relationship management (CRM) system.

Many CMSs offer integrations with CRM or marketing automation platforms. Integration goes in two different directions:

- The CMS might include tracking data in links, or otherwise report back to the CRM on actions that known users are taking on the website. In this sense, the CMS “spies” on the users and reports their activity back to a central location.

- The CMS might offer CRM demographic groups as personalization groups or criteria, allowing editors to more easily customize content for groups of users already created and represented inside their CRM.
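The first direction often starts with something as simple as tagging outbound links so that clicks can be attributed to a known contact. The sketch below uses Python’s standard `urllib.parse`. The `cid` and `campaign` parameter names are hypothetical, since every CRM defines its own.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def add_tracking(url: str, contact_id: str, campaign: str) -> str:
    """Append hypothetical CRM tracking parameters to a link, keeping existing ones."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("cid", contact_id), ("campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(query)))

print(add_tracking("https://www.mywebsite.com/packages/bora-bora?src=email", "c-1029", "winter"))
```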

Some CMS vendors have offered creeping functionality in this space, with the CMS incorporating more and more CRM and marketing automation features into the core. Some go so far as to offer email campaign management directly out of the CMS, complete with link and click tracking, and even direct customer management.

However, as marketing automation vendors such as HubSpot, Marketo, Pardot, and others have become more and more sophisticated, the industry is realizing that pre-built integrations with those systems are more likely to win customers. Thus, the marketing automation vendors are building integrations between their systems and CMS platforms, in an effort to present a unified platform that provides a more desirable product on both sides.

Form Building

Content management is usually about content output; however, most systems have some methods for handling content intake, via the generation of forms.

When creating forms, an editor has two main areas of concern:

- Generating the form interface

- Handling the form data once it’s submitted

In both cases, the range of possible functionality is wide, and edge cases abound. The market does well at supporting the mainstream use cases, but cases on the edges are often ignored or poorly implemented. The result is usually systems that work for simple data collection, but feel constraining for power editors trying to push the envelope.

Form building in CMSs drives significant overlap between editors and developers. The line between a simple data intake form and a data-driven application can become blurry. Editors might think that form building gives them the ability to do complicated things with data intake and processing, when that is rarely true.

Form Editing Interfaces

Editors use two main styles of system to create forms:

- A simple form editor, which allows the insertion and configuration of form fields to allow for content intake.

- A type of “reverse content management,” where content to be collected is modeled as a content type and the interface presented to the user is, in effect, “reversed,” with the user seeing the edit/creation interface, rather than the output. Unknowingly, users are creating managed content objects with their form input. (e.g., we might create a content type for “Contact Us Data,” and the visitor would see the creation form for that and would actually be creating a content object from the type by completing the form).

The former is vastly more common than the latter, and quite a bit more useful. Generation of input forms is usually an editorial task, while content modeling is a developer task. Expecting editors to model content to represent intake from visitors might be too much to ask.

Form editors operate in varying levels of structure:

- A minority are based on rich text editors that allow the free-form insertion of form fields like any other HTML-based element. These are very flexible, allowing for the creation of highly designed forms. Form fields are simply placed alongside information, such as their labels and help text, like any other rich, designed content.

- Most editors, however, are structured, meaning users are walked through the process of adding form fields with their accompanying labels and help text. The fields are then rendered in sequence, via a template.

In the latter (and far more common) case, editors can “Add a Form Field” and specify information similar to the following:

- Field type (text box, multiline text box, date picker, drop-down list, checkbox, etc.)

- Field name/label

- Help or additional text

- Validation rules

- Error messages

- Default value

These fields are ordered, then generated in a templated format. This usually generates clean forms that comply with style guidelines, but editors can find it constraining from a design perspective. For example, seemingly simple needs like placing two fields side by side might not be supported (vertical stacking is a common restriction with form rendering).
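A structured form builder essentially stores a list of field definitions and renders them in order through one template. That is also why layouts like side-by-side fields are hard to express. This minimal sketch uses a hypothetical `FormField` structure and markup, and it omits drop-down lists and validation.

```python
from dataclasses import dataclass
from html import escape

@dataclass
class FormField:
    name: str
    label: str
    field_type: str = "text"  # e.g., "text", "email", "date", "checkbox", "textarea"
    help_text: str = ""
    default: str = ""

def render_form(fields: list[FormField], action: str) -> str:
    """Render fields in order, one per row, the way a structured form template might."""
    rows = []
    for f in fields:
        name = escape(f.name)
        if f.field_type == "textarea":
            control = f'<textarea id="{name}" name="{name}">{escape(f.default)}</textarea>'
        else:
            control = (f'<input type="{escape(f.field_type)}" id="{name}" '
                       f'name="{name}" value="{escape(f.default)}">')
        help_html = f'<p class="help">{escape(f.help_text)}</p>' if f.help_text else ""
        # Every field gets the same vertical row structure.
        rows.append(f'<div class="field"><label for="{name}">{escape(f.label)}</label>'
                    f"{control}{help_html}</div>")
    return f'<form method="post" action="{escape(action)}">' + "".join(rows) + "</form>"
```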

Whenever you’re dealing with user-generated content, edge cases and the sanitizing/validation of data become concerns. As we discussed in Content Modeling, the possible requirements for data formats – and ways for users to circumvent and otherwise abuse them – are almost infinite.

Here are some common validation specifications:

- The input is required.

- The input must match a specified format (numeric, a certain number of digits, or a regex pattern).

- The date input (or numeric input) must be within a specified range.

- The input must be from a specified list of options.

(Does this sound like content modeling? It should. You’re essentially modeling the intake of data. This is reification at its most basic level.)
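As a rough illustration, the four specifications above can be expressed as optional rules on a single validation function. This is a sketch under assumed names (`validate` and its keyword arguments are hypothetical); it returns error messages rather than raising, so a form can show every problem at once.

```python
import re
from datetime import date


def validate(value, *, required=False, pattern=None,
             min_value=None, max_value=None, options=None):
    """Return a list of error messages; an empty list means the input passed.

    Each keyword argument corresponds to one common validation specification.
    """
    if value is None or value == "":
        # An empty optional field passes; the remaining rules are skipped.
        return ["This field is required."] if required else []
    errors = []
    if pattern is not None and re.fullmatch(pattern, str(value)) is None:
        errors.append("The input is not in the expected format.")
    if min_value is not None and value < min_value:
        errors.append(f"The value must be at least {min_value}.")
    if max_value is not None and value > max_value:
        errors.append(f"The value must be at most {max_value}.")
    if options is not None and value not in options:
        errors.append("Please choose one of the listed options.")
    return errors


# Usage: a five-digit code, a date range, and a fixed list of options.
zip_errors = validate("5540", required=True, pattern=r"\d{5}")
date_errors = validate(date(2015, 5, 1),
                       min_value=date(2015, 1, 1), max_value=date(2015, 12, 31))
choice_errors = validate("Blue", options=["Red", "Green"])
```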

Three additional areas of functionality are commonly requested by editors, but poorly supported in the market. They are:

- Conditional fields, which display, hide, or change their selection options based on prior input. The classic example is two drop-down menus, where the options of the second change based on the selection made in the first (e.g., selecting a car manufacturer in one drop-down changes the second drop-down to list all the models offered by that manufacturer).

- Multipart forms, which allow users to complete one section of a form, then somehow move to a second section that adds to the data collected by the first. Even more complex, the sections might be conditional, so that the values selected in the first section will dictate what options are offered in subsequent sections (or whether subsequent sections are offered at all).

- Form elements configured by content, where the options offered in drop-down menus, radio buttons, or checkbox lists are driven by content data. For example, a class sign-up form might show a list of classes pulled from content objects stored in the CMS.
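The car example combines two of these requests: the first drop-down is configured by content, and the second is conditional on the first. The server-side half of that might look like the sketch below. The content list is hard-coded sample data standing in for a repository query, and the function names are hypothetical; refreshing the second list in the browser would still require client-side scripting.

```python
# Sample content objects of a hypothetical "Car Model" type; a real CMS would
# return these from a repository query rather than a hard-coded list.
CAR_MODELS = [
    {"manufacturer": "Ford", "model": "Focus"},
    {"manufacturer": "Ford", "model": "Mustang"},
    {"manufacturer": "Honda", "model": "Civic"},
    {"manufacturer": "Honda", "model": "Accord"},
]


def manufacturer_options(content):
    """Options for the first drop-down, driven entirely by content."""
    return sorted({item["manufacturer"] for item in content})


def model_options(content, manufacturer):
    """Options for the second drop-down, conditional on the first selection."""
    return [item["model"] for item in content
            if item["manufacturer"] == manufacturer]
```

Because the options come from content, adding a new Car Model object changes the form without anyone editing the form itself.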

These options again bring into focus (or blur further) the line between editorial and developer control. At what point does the complexity of a form cross over from something an editor can handle to something a developer must implement? The line is not clear, but it is quite firm – editors usually don’t know where the edges are until they stumble on a requirement that cannot be implemented.

Form Data Handling

Once a form collects data and validates it, a decision needs to be made on what to do with it. Common options include:

- The data can be emailed to a specified set of addresses.

- The data can be stored in the CMS for retrieval, viewing, and exporting.

- The data can be sent to a specified URL, usually as an HTTP POST request or, less commonly, as a web service payload.

Most systems will allow you to select the first two in parallel.
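A minimal sketch of a submission dispatcher, assuming a hypothetical `handle_submission` function and an in-memory list standing in for CMS storage. It builds the email and the POST request with Python’s standard library but does not send them, so it runs offline; a real handler would pass the message to `smtplib` and the request to `urllib.request.urlopen`.

```python
from email.message import EmailMessage
from urllib.parse import urlencode
from urllib.request import Request

SUBMISSIONS = []  # stand-in for storing submissions in the CMS


def build_email(form_id, data, recipients):
    msg = EmailMessage()
    msg["Subject"] = f"New submission: {form_id}"
    msg["To"] = ", ".join(recipients)
    msg.set_content("\n".join(f"{key}: {value}" for key, value in data.items()))
    return msg


def build_post(data, url):
    body = urlencode(data).encode("utf-8")
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})


def handle_submission(form_id, data, *, email_to=None, store=True, post_url=None):
    """Apply each configured handler; email and storage can run side by side."""
    outbound = {}
    if email_to:
        outbound["email"] = build_email(form_id, data, email_to)
    if store:
        SUBMISSIONS.append({"form": form_id, **data})
    if post_url:
        outbound["post"] = build_post(data, post_url)
    return outbound


# Usage: email the data and store it in parallel; no POST target configured.
out = handle_submission("contact-us", {"name": "Pat", "topic": "Billing"},
                        email_to=["web@example.com"])
```

The POST option illustrates the coupling problem described next: `build_post` sends whatever fields the form happens to have, so renaming a field in the editor silently changes the payload the receiving service gets.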

The third option, while seemingly offering limitless integration possibilities, again causes the form creation and management process to become bifurcated between editors and developers. While an editor can create a form and send the data to a web service that has been developed for it, this limits the value of creating the form editorially in the first place. The web service is likely expecting the data in a specific format, and if an editor changes the form and the resulting data it transmits, there’s a good chance that the web service will not function correctly without a developer having to modify it.

The best advice for working with form builders and handlers might be to simply lower your expectations. Your goal should be simple data collection and handling and not much more. Too many editors assume form builders will allow them to create applications or otherwise play a part in complex enterprise data integration without any developer oversight or assistance. This is an unreasonable assumption and always leads to unmet expectations.

Simple data collection is quite possible, but an application development platform that completely removes the need for custom development in the future is just not an expectation that can reasonably be met.

URL Management

In the early days of CMSs, content URLs were commonly “ugly” and betrayed the internal workings of the system. For example:

/articles/two_column_layout.asp?article_id=354

This was in opposition to “friendly” or “pretty” URLs that looked like they were manually crafted from file and folder names, and which imparted some semantic meaning:

/articles/2015/05/politics/currency-crisis-in-china

Today, it’s quite rare to find a CMS that doesn’t implement some method of semantic URLs. In the case of systems with a content tree (discussed in Content Aggregation), these URLs are usually formed by assigning a segment to each content object, then aggregating the segments to form the complete URL (and perhaps adding a language indicator to the beginning).

So, if your tree looked like this:

- Articles (segment: “articles”)

  - 2015 (“2015”)

    - May (“05”)

      - Politics (“politics”)

        - The Currency Crisis in China (“currency-crisis-in-china”)

It would result in the second URL shown above.
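The aggregation of segments can be sketched as a walk from an object up to the root of the tree. `ContentNode` is a hypothetical class for illustration only; a system that prefixes a language indicator would simply prepend it to the result.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContentNode:
    title: str
    segment: str                          # this object's own URL segment
    parent: Optional["ContentNode"] = None

    def url(self) -> str:
        """Walk up to the root, collecting segments, then join them top-down."""
        segments = []
        node = self
        while node is not None:
            segments.append(node.segment)
            node = node.parent
        return "/" + "/".join(reversed(segments))


# The tree from the example above.
articles = ContentNode("Articles", "articles")
year = ContentNode("2015", "2015", articles)
month = ContentNode("May", "05", year)
politics = ContentNode("Politics", "politics", month)
article = ContentNode("The Currency Crisis in China", "currency-crisis-in-china", politics)
```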

Other systems without a content tree invariably have some logic for forming URLs, whether by content type, menu position, or folder location. It’s rare to find a CMS that doesn’t account for semantic URLs in some form.

In most systems, the URL segment for a particular content object is automatically formed based on the name or title of the object, but is also editable both manually and from code. An editor might manually change the URL segment for some reason, and a developer might write code to change it based on other factors (to insert the date to ensure uniqueness, for example).
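Automatic segment generation usually amounts to “slugifying” the title. The sketch below is one plausible approach, not any particular CMS’s algorithm: `make_segment` and `unique_segment` are hypothetical, and the date suffix mirrors the uniqueness example just mentioned. Note that it keeps a leading “The”; dropping it is the kind of manual edit an editor might make.

```python
import re
import unicodedata
from datetime import date


def make_segment(title: str) -> str:
    """Derive a URL segment from a title: ASCII, lowercase, hyphen-separated."""
    # Split accented letters into base letter + accent, then drop the accent.
    ascii_title = (unicodedata.normalize("NFKD", title)
                   .encode("ascii", "ignore").decode("ascii"))
    # Collapse every run of non-alphanumeric characters into one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


def unique_segment(title: str, sibling_segments: set, published: date) -> str:
    """Append a month/year suffix (e.g., "-0515") if a sibling already uses the segment."""
    segment = make_segment(title)
    if segment in sibling_segments:
        segment = f"{segment}-{published:%m%y}"
    return segment
```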

Forming the URL based on a content object’s position in the geography is convenient, efficient, and most of the time results in a correct URL (or at least one that isn’t objectionable). However, the “tyranny of the tree” applies here as well – the URL is formed by the tree, but an object might be in some position in the tree for reasons other than the URL, which makes it problematic to form the URL from its position.

For example, our news article example might have been organized in that particular manner (under a year object, then a month object, then a subject object) for convenience in locating content administratively, or for other reasons related to permissions or template selection. However, this organization forces a URL structure as a byproduct, and what if you want something different? For example:

/articles/currency-crisis-in-china-0515

In this case, for whatever reason, you want the title of the article to form most of the URL, with the year and month appended to the end. Effectively, the year and month need to be “silent” in the URL, and you need to adjust the article’s specific URL segment to add the date. Some systems will allow for this, and some won’t.
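In systems that do allow it, one way to model “silent” levels is a per-object flag that excludes its segment when descendant URLs are built. The `silent` flag and the `Node`/`url_for` names below are hypothetical; the article’s own segment carries the date instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    segment: str
    parent: Optional["Node"] = None
    silent: bool = False   # hypothetical flag: omit this segment from URLs


def url_for(node: Node) -> str:
    """Join the segments of all non-silent ancestors, top-down."""
    parts = []
    while node is not None:
        if not node.silent:
            parts.append(node.segment)
        node = node.parent
    return "/" + "/".join(reversed(parts))


# Year, month, and subject still organize the tree, but stay out of the URL.
articles = Node("articles")
year = Node("2015", articles, silent=True)
month = Node("05", year, silent=True)
politics = Node("politics", month, silent=True)
article = Node("currency-crisis-in-china-0515", politics)
```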

Historical URLs, Vanity URLs, and Custom Redirects

URLs are part of the permanent record of the Internet. They are indexed by search engines, sent in emails, posted to social media, and bookmarked by users. So, changing a URL might introduce broken links. Additionally, when the URL is formed by the tree, changing the URL segment of an object “high” on the tree will necessarily change all the URLs for the content below it, which might amount to thousands of pages. Carelessness can be catastrophic in these situations.

Some systems will account for this by storing historical URLs for objects, so if an object’s URL changes, the system will remember the old URL and can automatically redirect a request for it. Other systems won’t do this, and this functionality has to be added manually.
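Where the system doesn’t do this for you, the bookkeeping might look something like the sketch below (all names hypothetical). Renaming a segment high on the tree means re-registering every descendant, and the registry remembers each old URL so that a request for it resolves to a permanent redirect. Old URLs map to the content ID rather than to the next URL, so repeated renames never produce redirect chains.

```python
class UrlRegistry:
    """Tracks current and historical URLs for content objects."""

    def __init__(self):
        self.current = {}      # live url -> content id
        self.historical = {}   # retired url -> content id
        self.by_id = {}        # content id -> live url

    def set_url(self, content_id, url):
        old = self.by_id.get(content_id)
        if old is not None and old != url:
            del self.current[old]
            self.historical[old] = content_id   # remember the retired URL
        self.historical.pop(url, None)          # a reclaimed URL is live again
        self.current[url] = content_id
        self.by_id[content_id] = url

    def resolve(self, url):
        """Return (200, content id), (301, new url), or (404, None)."""
        if url in self.current:
            return 200, self.current[url]
        if url in self.historical:
            return 301, self.by_id[self.historical[url]]
        return 404, None


def rename_prefix(registry, old_prefix, new_prefix):
    """Changing a high segment changes the URL of everything beneath it."""
    for content_id, url in list(registry.by_id.items()):
        if url == old_prefix or url.startswith(old_prefix + "/"):
            registry.set_url(content_id, new_prefix + url[len(old_prefix):])


reg = UrlRegistry()
reg.set_url(1, "/articles")
reg.set_url(354, "/articles/2015/05/politics/currency-crisis-in-china")
rename_prefix(reg, "/articles", "/news")
```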

Editors might also want to provide a completely alternate URL for a content object – for example, a shorter URL to use for other media (print or signage), or a URL with marketing significance for content deep in the site that might have a less advantageous URL naturally.

For example:

www.mywebsite.com/free-checking

www.mywebsite.com/signup

In these cases, an alternate URL can sometimes be provided that either produces the content directly, or redirects the user to the content. If the former, the content might still be available under the natural URL as well, which raises the question of which URL the site itself uses when referencing the content in navigation.

In addition to reasons of vanity, editors might want alternate URLs for their content to account for vocabulary changes. For example, when the name of your product has changed, and the old name is in 100 different URLs, this presents a marketing problem. Another situation is continuing to provide access to content after a site migration. In these cases, a series of alternate URLs for a content object might be required in order to provide for continuity.

Some systems will allow for storage of alternate URLs with content, while others might provide an interface to maintain data that maps old URLs to new URLs. Some systems might redirect automatically in the event of a 404, while in others these redirects have to be wired up manually, usually by including lookup and redirection code in the execution of the 404 page itself. (This means the code only executes and redirects in the event of an old URL access that would otherwise return a 404.)
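The manual 404-page approach can be sketched as a lookup that runs only after normal routing has failed. The mapping and its target paths are hypothetical sample data, and normalizing case and trailing slashes is a design choice for vanity URLs that people type by hand, not something every system does.

```python
# Hypothetical redirect map maintained by editors: vanity or legacy path -> target.
REDIRECTS = {
    "/free-checking": "/personal/accounts/checking/free",
    "/signup": "/personal/accounts/open-an-account",
}


def handle_not_found(path):
    """Runs only when normal routing found nothing for `path`."""
    # Be forgiving about case and trailing slashes in typed-in URLs.
    key = path.rstrip("/").lower() or "/"
    target = REDIRECTS.get(key)
    if target is not None:
        return 301, {"Location": target}   # permanent redirect to the real content
    return 404, {}
```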

Multisite Management

If you want to deploy a second website using a CMS that supports multiple sites, you can choose between two solutions:

- Stand up a completely separate instance of the software (on the same server, or even on another server). The new instance of the CMS in question will have no knowledge of, or relation to, the existing website.

- Host the second website inside the existing instance of the CMS. This website will have a more intimate knowledge of the first website, and might be able to share content and assets with it.

Hosting more than one website in the same CMS instance can, in theory, reduce your management and development costs by sharing data between the two websites. Items that are often shared include:

- Content objects, such as images or other editorial elements. Your two websites might share the same privacy policy, for example, or display the same news releases.

- Users, either editorial or visitors. The same editors might be working on content in both sites, and users might expect the same credentials to work across sites.

- Code, including backend integration code and templating code. The sites might share functionality, and the ability to develop it for one site and reuse it on another can be a significant advantage.
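As a rough sketch of what “sharing” means at runtime, a multisite instance typically resolves the requested hostname to a site, then shows only the content assigned to that site, with shared items assigned to several. The hostnames, site keys, and `ContentItem` structure below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContentItem:
    title: str
    sites: frozenset   # which sites may display this item


SITE_BY_HOST = {"www.mywebsite.com": "main", "promo.mywebsite.com": "microsite"}

CONTENT = [
    ContentItem("Privacy Policy", frozenset({"main", "microsite"})),   # shared
    ContentItem("Main Home", frozenset({"main"})),
    ContentItem("Campaign Landing", frozenset({"microsite"})),
]


def content_for_host(host):
    """Resolve the site from the Host header, then filter content to that site."""
    site = SITE_BY_HOST.get(host.split(":")[0].lower())
    if site is None:
        raise LookupError(f"No site configured for host {host!r}")
    return [item.title for item in CONTENT if site in item.sites]
```

Every item carries an explicit site assignment here; the more the two sites diverge, the more of that assignment (and the policing of it) falls to editors and developers.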

However, it’s hard to generalize about whether or not this is advantageous, because two sites in the same CMS instance might have a wide range of relationships. In some situations, sharing is an advantage, while in others it’s a liability.

On one extreme, the second site might just be a reskinning of the first. It might have the exact same content and architecture, just branded in a slightly different way.

On the other end of the scale, the second site might be for an entirely different organization (perhaps you’re a third party providing SaaS-like CMS hosting). In this case, sharing the same CMS instance is likely to be more trouble than it’s worth since preventing the sharing of editors, content, and code will be far more important than sharing any of it, and will require policing and increased code complexity.

Somewhere in the middle is the most common scenario: the second site is for the same organization, so sharing editors is beneficial, and the second site requires some of the content of the main site, which can also be helpful. But the second site will also bring a lot of unique content, functionality, and formatting, to the point that sharing code and templating is not feasible. This is becoming more common as marketing departments support larger campaigns with individual microsites that are intentionally quite different from the main site in terms of style and format.

Additionally, the second site might need content modeling changes, so sharing content types will be difficult. For instance, if your microsite has a right sidebar on its pages (and the main site does not), how do you handle that? Do you add a Right Sidebar attribute to the Text Page content type for the entire installation, and just ensure that editors of the main site know that it doesn’t apply to them? Or do you create a new content type for the microsite, and suffer through the added complexity of maintaining both Main Site Text Page and Microsite Text Page content types? What happens when the next microsite needs to launch with another slightly different content model?

The resulting confusion can make multisite management difficult. The core question comes back to what level of sharing between the two sites is advantageous, and how the CMS makes this easier or more difficult. Organizations have been known to force through a multisite CMS installation on dogmatic principle (“We should be able to do this!”) when simply setting up another site instance would have been less work and resulted in a better experience for both editors and users.

Reporting Tools and Dashboards

Two things that content editors and managers are consistently looking for are control and peace of mind. Many CMSs are implemented because the organization is unsure of how much content it has, and what condition that content is in. There’s a distinct lack of clarity in most organizations about their content, and the metaphor of “getting our arms around our content” comes up often.

For these reasons, simple reporting goes a long way. Editors and content managers love to see reports that give them an overhead view of their content. For example, many organizations would like to simply see a list of all the image files in their CMS that aren’t used by any content and can therefore be safely deleted.
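The unused-image report can be sketched as a set difference between all stored images and all referenced ones. This sketch assumes images are referenced only through `<img src="...">` in rich text; real systems also reference assets through relation or image fields, so a production report would query those too, and its output is a list of candidates to review rather than something to delete automatically.

```python
import re

# Matches the src attribute of <img> tags (assumes double-quoted attributes).
IMG_SRC = re.compile(r'<img[^>]+src="([^"]+)"', re.IGNORECASE)


def unused_images(image_paths, content_bodies):
    """Return stored images that no content body references, sorted."""
    referenced = set()
    for body in content_bodies:
        referenced.update(IMG_SRC.findall(body))
    return sorted(set(image_paths) - referenced)
```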

Reporting tends to be glossed over by vendors for two reasons:

- Like all editorial tools, it affects a smaller audience of people (you have fewer editors than visitors), so it can fade in importance compared to more public-facing functionality.

- Developing reporting tools can be frustrating because there are an infinite number of reports that an editor or manager could request. It’s virtually impossible to predict what someone might want, so it becomes a huge bundle of edge cases.

Many systems offer reporting dashboards or tools to provide insight into content residing in the system. These systems will usually come with a set of preconfigured reports for common reporting needs. Some examples are:

- Content in draft

- Content scheduled for publication

- Expired content

- Content with broken hyperlinks

- Pending workflow tasks assigned to you

- Workflow states that have been pending for longer than a specified time period

While this is certainly valuable information, no system can anticipate the level of reporting required by any individual user. All it takes is one editor to say, “Well, I really just want to see articles in the politics section that are in draft, not everything else” to render a canned report useless.

A lot of reporting is simply ad hoc.

Some systems might have an interface to develop reports. However, the type and range of possible queries are so varied that a completely generalized interface would be far too complex – take another look at the screencap of the Drupal Views interface from Content Aggregation, and increase the complexity an order of magnitude or more.

Additionally, some editors simply don’t understand all the intricacies of their content or query logic enough to be trusted to build a report they can depend on. If they don’t understand that content Pending Approval can also technically be considered to be in Draft, then they might construct and depend on a report that’s fundamentally invalid.

In these cases, a competent API (as discussed in the previous section) coupled with a solid reporting framework is the best solution. Developers who have good searching tools and a framework to quickly build and deploy reports can respond quickly when editors need new reports.
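For instance, the editor’s earlier request for “articles in the politics section that are in draft” is a few lines for a developer with a decent API. In this hypothetical sketch, the workflow states and the `Article` structure are assumptions; the point is that the developer, not the editor, decides that Pending Approval counts as draft, so the report doesn’t silently miss content.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical workflow states treated as "not yet published" for this report.
DRAFT_STATES = {"Draft", "Pending Approval"}


@dataclass
class Article:
    title: str
    section: str
    state: str
    modified: date


def drafts_in_section(articles, section):
    """Draft-like articles in one section, most recently modified first."""
    rows = [a for a in articles if a.section == section and a.state in DRAFT_STATES]
    return sorted(rows, key=lambda a: a.modified, reverse=True)


sample = [
    Article("Budget vote preview", "politics", "Draft", date(2015, 5, 2)),
    Article("Currency crisis follow-up", "politics", "Pending Approval", date(2015, 5, 4)),
    Article("Election recap", "politics", "Published", date(2015, 5, 1)),
    Article("Lake fishing tips", "outdoors", "Draft", date(2015, 5, 3)),
]
report = [a.title for a in drafts_in_section(sample, "politics")]
```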

Content Search

We’ve discussed variants of search in prior sections – in Content Aggregation we discussed searching for content as a method of aggregation, and we just discussed searching in terms of a system’s API or reporting. However, search in these contexts was “parametric” search, or searching by parameter.

This is an exact, or “hard,” search. If you want a list of all content published in 2015, reverse-ordered by date, then that’s a very clear search operation that’s not subject to interpretation. The year – 2015 in this case – is a clear, unambiguous parameter, and a content object either matches it or doesn’t. The ordering is also unambiguous – dates can easily be reverse-ordered without having to resort to any interpretation.

Content search is the opposite. This is the searching that users do for content – the ubiquitous search box in the upper-right corner of the page. This is a “soft” search, which is inexact by design. The goal is to interpret what the user wants, rather than do exactly what the user says. The results provided should be an aggregation of content related to the query – even if not exactly matching the query – and ordered in such a way that the closest match is at the top.
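The contrast can be shown with two small functions over the same sample data (both hypothetical). The parametric search has one right answer; the “soft” search uses a toy score, the fraction of query words found in the title, which is nothing like the relevance ranking of a real engine but shows the key difference: partial matches are kept and ordered by closeness.

```python
from datetime import date

ITEMS = [
    {"title": "Lake fishing for beginners", "published": date(2015, 6, 1)},
    {"title": "Fishing in the lake at dawn", "published": date(2014, 3, 9)},
    {"title": "Currency crisis in China", "published": date(2015, 5, 12)},
]


def parametric(items, year):
    """Hard search: an item matches the year or it doesn't; ordering is unambiguous."""
    hits = [i for i in items if i["published"].year == year]
    return [i["title"] for i in sorted(hits, key=lambda i: i["published"], reverse=True)]


def soft_search(items, query):
    """Soft search: keep partial matches, best-scoring first (ties keep input order)."""
    terms = set(query.lower().split())
    scored = []
    for item in items:
        words = set(item["title"].lower().split())
        score = len(terms & words) / len(terms)
        if score > 0:
            scored.append((score, item["title"]))
    return [title for score, title in sorted(scored, key=lambda s: -s[0])]
```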

Search can be very vague and idiosyncratic to implement. Editors and content managers often have specific things they want to see available, and this is exacerbated by “the Google Effect,” which postulates that anything Google does simply increases our expectation of having that feature in other contexts. Google offers spellchecking, so this must be a simple feature of search, right? Google does related content, so why can’t we?

Requested features of content searching can include any of the following:

- Full-text indexing: Return results for nonadjacent terms (for example, content that includes the phrase “fishing in the lake” will match when someone searches for “lake fishing”).

- Spellchecking and fuzzy query matching: Understand search terms that almost match and account for them.

- Stemming: Reduce words to a common root by normalizing suffixes and verb forms (for example, a search for “swimming” also returns results for “swim” and “swims”).

- Geo-searching: Search for locations based on geographic coordinates – either distance from a point, or locations contained within a “bounding box.”

- Phonetic or Soundex matching: Calculate how a word might sound and search for terms that sound the same.

- Repository isolation: Search only a specific section of the content geography.

- Synonyms and authority files: Specify that two terms are similar and should be evaluated identically.

- Boolean operators: Allow users to add AND, OR, and NOT logic to their queries.

- Biasing: Influence search results by increasing the score for content related to a specific search term, and perhaps allow editors or administrators to change bias settings from the interface.

- Result segregation: Allow for the visual separation of specific content at the top of the results.

- Related content searching: Suggest content related to the content a user is searching for (“show me more like this”).

- Type-ahead or predictive searching: Attempt to complete a user’s search term in the search box while the user types.

- Faceting or filtering: Let users refine their searches to specific parameter values (this represents a mixing of parametric and content search paradigms).

- Search analytics and reporting: Track search terms, result counts, and clickthrough on result pages.

- Stopwords: Remove common words from indexed content.
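A few of these features can be seen working together in a tiny inverted index: full-text matching of nonadjacent terms, stopwords, a synonym “authority file,” and stemming. This is a deliberately small sketch with hypothetical names; the suffix stripping is far cruder than a real stemmer (such as Porter’s), and dedicated engines (Lucene-based ones, for example) do far more.

```python
import re
from collections import defaultdict

STOPWORDS = {"a", "an", "and", "in", "of", "the", "to"}
SYNONYMS = {"automobile": "car", "auto": "car"}   # variant -> preferred term


def stem(word):
    """Very crude suffix stripping, a stand-in for a real stemming algorithm."""
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def normalize(text):
    """Tokenize, drop stopwords, map synonyms, then stem."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [stem(SYNONYMS.get(t, t)) for t in tokens if t not in STOPWORDS]


class InvertedIndex:
    def __init__(self):
        self.postings = defaultdict(set)   # term -> ids of documents containing it

    def add(self, doc_id, text):
        for term in normalize(text):
            self.postings[term].add(doc_id)

    def search(self, query):
        """Documents containing every query term, in any position (implicit AND)."""
        terms = normalize(query)
        if not terms:
            return set()
        result = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            result &= self.postings.get(term, set())
        return result


idx = InvertedIndex()
idx.add(1, "Fishing in the lake")
idx.add(2, "Used cars for sale")
idx.add(3, "The lake house")
```

Here a search for “lake fishing” finds document 1 even though the words appear in a different order, and “automobile” finds the document about cars.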

Some of these might seem bizarre or esoteric, but this is simply because most users don’t realize that they’re implemented in search engines without being announced or obvious. These technologies have been advanced to the level that they’ve become an inextricable part of our expectation of how search works.

Now consider the hapless CMS vendors who have to implement and duplicate these features in their systems, out of the box. Commercial search systems exist that rival and exceed the complexity of many content management systems. The average CMS vendor will never be able to compete, especially considering that search isn’t the core function of their product.

For this reason, search is likely the feature most often implemented outside the CMS itself. Large CMS implementations usually have search services provided by some other platform. This compounds the problem for CMS vendors – since many customers look elsewhere for search, there is even less incentive for vendors to spend a lot of time working on it.

This is magnified even further by the difficulty of evaluating search effectiveness. When we see a page of search results, how often do we spend time evaluating whether or not it’s accurate, or whether or not the results are in the most correct order? By design, this type of search is fuzzy and inexact, so we’re likely to simply accept the default results as optimal since we assume the vendor knows more than we do. Vendors will often simply allow (or even encourage) users to continue to think this.

The result is that search is a feature where CMS vendors simply seek to “check the box.” They usually implement search superficially, just so they can say their products have it. They hope that their implementations will suffice for 90% of customers (which is often true), and assume those who have more advanced needs will use another product for search.

Finally, understand that the underlying search technology is only one part of the user’s search experience. An enormous amount of the value from search is driven by the user interface. How are the results displayed? How does the predictive search work? How well can users refine their queries? These are fundamentally user experience problems that a searching system cannot solve, and that are fairly specific to the implementation. It’s always a bit dangerous for a CMS vendor to add client interface functionality because it runs a very real chance of conflicting with the customer’s design or UX standards (remember the discussion about “infiltrating the browser” from Output and Publication Management).

What support can the CMS offer in these situations? The most crucial is a clear API that allows developers to customize search features as the editors and content managers desire and as the users need. Alternatively, the CMS needs to provide hooks and events to which a developer can attach an external system to allow for searching to be powered by a separate product.
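The hooks-and-events approach might look like the sketch below: the CMS raises events when content is published or unpublished, and a developer subscribes handlers that keep an external index in sync. The event names, `EventBus`, and `ExternalSearchClient` are all hypothetical stand-ins; a real integration would call the search product’s own client library inside the handlers.

```python
from collections import defaultdict


class EventBus:
    """Minimal publish/subscribe hook mechanism, as a CMS might expose it."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event, handler):
        self._handlers[event].append(handler)

    def publish(self, event, payload):
        for handler in self._handlers[event]:
            handler(payload)


class ExternalSearchClient:
    """Hypothetical stand-in for a client of a separate search product."""

    def __init__(self):
        self.documents = {}

    def index(self, doc_id, fields):
        self.documents[doc_id] = fields

    def delete(self, doc_id):
        self.documents.pop(doc_id, None)


bus = EventBus()
search_client = ExternalSearchClient()
bus.subscribe("content.published",
              lambda c: search_client.index(c["id"], {"title": c["title"], "body": c["body"]}))
bus.subscribe("content.unpublished", lambda c: search_client.delete(c["id"]))
```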

User and Developer Ecosystem

This might seem to be an odd “feature” with which to round out this chapter, but the support and development community surrounding a CMS platform is perhaps its most important feature. There is simply little substitute for the support, discourse, and contributions of a thriving community of users and developers who assist others.

Vendors can help or hamper this effort. Most vendors will provide an official community location for their users through forums and code-sharing platforms. If a vendor does not, one might spring up organically, though its existence might not be known outside a smaller group.

Vendors can further support the community by participating in it. Several CMS community forums are patrolled in part by the developers behind the products, which provides a backchannel support mechanism and, perhaps more importantly, gives these developers a front-row seat to observe the struggles of their users and the ways in which the product should be developing to meet their needs.

Developers contributing code to the community is a huge advantage that can be measured in raw budget. You are often not the first organization to try to solve a particular problem, and tested, vetted code for your exact situation might already exist, saving you the expense of (re)building it. (I maintain that there are few problems that a contributed Drupal module doesn’t already exist to solve.)

When evaluating a CMS, evaluate the community in parallel. It will likely have an outsized impact on your experience and satisfaction with the platform.

Multilingual content management was originally much more advanced in systems coming from Europe, because it had to be built in from the start. Europeans are more multilingual than the rest of the world due to the sheer number of different languages spoken in close proximity. As such, CMSs coming from Europe a decade ago tended to be multilingual at their roots, while systems from the rest of the world often had to retrofit this feature later in their lifecycles.

I’ll assume English for the purposes of this chapter, though I apologize for the ethnocentrism.

While it would make sense to simply list languages in order of preference, each language is instead given a “q=” value, the “quality factor,” which indicates its relative preference. Why the complication? It’s related to a larger concept of “content negotiation” built into the HTTP specification, which allows other variations of content, such as media type, to be requested with quality factors as well. In practice, however, content negotiation is rarely used at this level.

Technically, the correct status code should be “406 Not Acceptable,” which means that content can only be produced with “characteristics not acceptable according to the accept headers sent in the request.” However, this is rarely used and might be confusing, so most sites will return a 404 Not Found.

This is common with “language families” or “proto-languages,” which are groups of similar regional languages. For example, Norwegian, Swedish, Danish, Faroese, and Icelandic are all vaguely related to a historic language called Old Norse. Many European languages also share common features (large portions of the alphabet, punctuation, and formatting, such as left-to-right reading direction).

Though not strictly related to your CMS, a little-used variant of the LINK tag can be used to point to equivalent content in other languages: <link rel="alternate" hreflang="sv" href="http://se.mywebsite.com/" />. Some search engine indexers will use this to identify the same content in multiple languages.

This is more accurately referred to as “culture translation” rather than “language translation.”

These settings are often referred to as the “locale settings” of a user.

Which, incidentally, might be one reason why Germans use Twitter at a markedly lower rate than people in other countries. It is much harder to shoehorn the German language into 140 characters than other languages.

Several years ago, a system with a list price of $4,999 released a marketing and personalization add-on module at a list price of $14,999 – three times the cost of the CMS itself.

The generic term “personalization” once referred solely to known personalization when that was the only type available. However, when anonymous personalization tools began to hit the market, the accepted meaning of the term changed, and now it’s more commonly used to refer to anonymous personalization. The implication is that known personalization is such an obvious and accepted feature that it no longer requires a differentiating name.

Note that user tracking is limited by browser technology and privacy safeguards. A user with strict privacy settings, using a different browser than usual, or even clearing the browser cache will likely disrupt any attempt at this type of personalization.

This is surprisingly easy information to get using a combination of geolocation and freely available weather web services.

If personalization interests you, I highly recommend The Filter Bubble by Eli Pariser (Penguin), which delves deeply into the sometimes sinister ways websites and companies use personalization, and the resulting changes to our culture and opinions.

“Semantics” is the study of meaning. To describe something as “semantic” is to say that it provides some larger meaning beyond its original or obvious purpose. The actual purpose of a URL is simply to identify content. A semantic URL provides some indication of what the content is, in addition to identifying it.

There might also be negative SEO implications to having the same content available under more than one URL.

So-called “affinity sites” are common. My company once performed an implementation for an organization that sold branded financial products. They had 86 individual websites in the same CMS installation, all of which shared 90% of the same content, with just styling changes and minor content changes to differentiate them.

Ad hoc is Latin for “for this purpose,” and generally means “something done for a specific or particular reason without prior planning.”

“The PageRank Citation Ranking: Bringing Order to the Web,” January 29, 1998. Interesting side note: the original patent for this was actually owned by Stanford itself. See “Method for Node Ranking in a Linked Database,” September 4, 2001.

Yes, the name is unique. Doug Cutting, the original developer, took inspiration from his wife’s middle name, which was her maternal grandmother’s first name. It appears that the last time “Lucene” was even vaguely popular as a girl’s name in the United States was in the 1930s.