Content Reuse and The Problem of Narrative Flow

Reusing content across multiple channels is the Holy Grail of content management. But it’s not that simple. For certain types of content, it’s very hard to do without alienating your audience.

The other day, we got a question from someone: could text content be effectively managed down to the individual paragraph level? This has come up before from clients trying to avoid duplicating content, but, in the end, it was always determined that the benefits of the few scenarios where it would…

The document discusses the challenges of managing content down to the individual paragraph level for effective reuse. It highlights that while this method has been successful in the technical writing field, it requires significant investment in technology and training, and the content must be well-suited to this model. The document also highlights the challenges of maintaining narrative flow and maintaining coherence when chunking and re-using content, and suggests that the best suited content is highly structured, such as technical documentation.

Generated by Azure AI on June 24, 2024The other day, we got a question from someone: could text content be effectively managed down to the individual paragraph level?

This has come up before from clients trying to avoid duplicating content, but, in the end, it was always determined that the benefits of the few scenarios where it would help were outweighed by the numerous drawbacks it would introduce, so the idea always got dropped.

Nevertheless, it’s seductive. In fact, content reuse has been one of the implied promises of the content management world ever since it was born. This was summed up in a Twitter exchange about the famous NPR COPE example.

That launched countless ill-fated single sourcing efforts. Single-sourcing is the white whale of our industry. – Jeff McIntyre

In general, I agree. Content reuse often gets taken to an extreme where too many penalties are incurred trying to avoid ever duplicating any content. We lose sight of the forest for the trees and start paying ridiculous costs for relatively small savings.

But, in this particular case, the request didn’t seem so far fetched. This company generated content as a business model. They made money by creating content products around a common subject. In many places, information from Content A was duplicated in Content B, and could we limit that by chunking content and managing it down to groups of sentences which could be assembled into new content products?

The ultimate dream, of course, would be to assemble quality content while rarely having to write anything new. Just throw together references to small content chunks which already exist, and – voila! – out comes fresh, new content. In this particular instance, with this particular client, this might actually be a great outcome. But was it actually possible?

I decided to find out. What followed was a week of talking to as many luminaries in the field as I could find and call in (or beg) favors from. For this post, I thank people like Rahel Bailie, Jeff McIntyre, Tony Bryne, Sara Wachter-Boettcher, Molly Malsam, Ann Rockley and others.

Here’s what I learned.

In a nutshell, content can be managed down to that level. But it’s not easy, it requires considerable investment in technology and training, and the content has to lend itself to that model. The sad fact is that not all content – relatively little, actually – works well for this.

But let’s back up –

Content reuse is not new.

Technical writers have been doing this for years. – Rahel Ballie

And Rahel would know, as she’s spent decades in that field. Indeed, in the tech doc world, this is de rigueur. There are even standards for it – DITA and S1000D to name two. These are markup languages designed to let you break content down into tiny pieces, then extract that content and reuse it somewhere else.

But it’s not easy. There are a number of issues with this that go beyond the choice of markup language.

Your authoring tool has to enable this. There are tools specifically for authoring “structured content” – apps like Author-It, Serna, and Framemaker. These tools are designed to allow you to embed Content A in Content B easily and on-the-fly while you’re writing, with minimal disruption.

Your authors have to be trained to use these tools. They have to understand models for reuse – when it makes sense and when it doesn’t – and be able to reuse content in the correct context and locations. (And write content for effective reuse – much more on that later.)

Your CMS has to be designed for this. You can only get so far with a mainstream CMS. If you want to do seriously granular content chunking, you’re going to need one of the “component content management systems” which are quite popular in the technical writing world – systems like Vasont, IxiaSoft, and DocZone. Never heard of them? You’re not alone – it’s a pretty esoteric field. These systems specialize in managing and providing access to content down to the paragraph, sentence, and sometimes word level.

Content production gets complicated. You might need variable content that operates on logical rules. During some batch process, Content A will be included in Content B, but Word X will be replaced by Word Y if Content C has already been included and Flag Z has been set on the document. Does that sound like programming code? It’s not that far off – when you start treating content like little nuggets of data, you end manipulating it in the same way a programmer might.

Also, you suddenly need to be cognizant of versioning issues that arise from embedding. If you have a chunk of content reused in 150 different documents, this presents some interesting challenges. If you want to change that content, you run the risk of disrupting 150 other pieces of content. Can you be sure your change will work in all cases?

What if you want to start with the original chunk of content, but change it slightly for a specific usage? (“Derived usage,” as Ann Rockley calls it.) Can you branch it, and start a new set of versions for that particular usage? And what if your change isn’t meant for one specific location in which it’s embedded? Can references to that content be to a specific version of that content, rather than the piece of content in general?

The complexity of your management starts scaling upward pretty quickly as you break content down. You could have a single content item made up of dozens of sub-items, across multiple versions of each, governed by dozens of rules, and assembled in batch.

Tony Byrne gave me an idea of the pain this can cause:

Taken to its logical extreme, you could chunk even at the sentence or noun level. Which is where the whole concept starts to become insane for nearly all use cases. After the mega-chunking craze hit ten years ago, all these web teams had hangovers about trying to manage, let alone display, highly decomposed content. There were even IAs who specialized in “upchunking” – making content models more coarse-grained so that they could actually be managed.

Tony is awfully consistent here – ten years ago, he said the following in an article in EContent Magazine:

Most organizations tend to initially over-complicate their structural formats, which can be overwhelming for content contributors and editors heretofore unfamiliar with working with atomic snippets. After soul-searching on what level of granularity is required to achieve a competitive advantage, many organizations inevitably accept some compromises […]

Confused yet? There’s a lot to it. But, again, folks in the technical writing field have been doing this for years to great effect.

So, if it works there, should that success extrapolate to all content? Nope.

Why? Because technical writing documentation is a style of content that lends itself well to content reuse. I cannot emphasize that enough – the style of content will drastically impact your ability to reuse it. The fact is, once you leave the documentation world, it gets much harder to chunk content to that level and reassemble it coherently. The reason why this is true requires us define a couple terms: “content boundary” and “narrative flow.”

A “content boundary” is a cognitive border around a piece of content. Its the mental space you put between two pieces of content.

This blog post, for example, is a single conceptual thing in your mind. You may have read other blog posts in your life – maybe even other posts on this site – but they are not this post. For you to read those posts, you would stop reading this one, and mentally transition to another one. In the process of doing that, you cognitively “reset” yourself. You don’t expect another blog post to “know” about this one. It may be written by someone else in another style and tone. You understand this, and expect that you will have made a contextual shift.

Conversely, a single word in this blog post has no content boundary around it. In your mind, it’s the same conceptual thing as the rest of it. Same with sentences and paragraphs. They are mentally considered to be within the larger boundary of the blog post you’re reading with now.

“Narrative flow” is the concept of multiple sentences and paragraphs of text flowing together into a coherent block of content within the same content boundary. Text that flows has the same style, tone, tense, pacing, and general feel to it. It’s a very subtle thing, but if the narrative flow is interrupted by text that doesn’t match, the effect on the reader is jarring.

Take this blog post again – I did some outlining before I wrote it and some proof-reading and re-arranging after I was done writing it, but it was otherwise written in a single session, from start to finish by a single person. While I’m writing this paragraph, I know what I wrote in the last paragraph and I have a very good idea of what I’m going to write in the next paragraph. Therefore, I can use things in this paragraph like callbacks or foreshadowing, I can match the style and tone of other paragraphs, and I can avoid repeating something I said earlier. This paragraph exists as part of a narrative that flowed forth from a single mind.

As a reader, you expect narrative flow within content boundaries. You expect this blog post to flow from the beginning to the end. If it doesn’t, you notice.

The same isn’t true if you shift content boundaries, even within the same page.

Consider this paragraph. It’s offset with a different font spacing and shading. Whether you realize it or not, this paragraph exists in a slightly different content boundary than the paragraphs before or after it. This paragraph could be radically different in style and tone and you’d be more forgiving of that, because it’s visually set apart and is therefore – to your mind – a different piece of content than what comes before or after.

Mentally, the reading voice in your head may have even changed tone while you were reading that box of content. Now you’re reading this in the “normal” voice again.

Blockquotes are another example:

A hungry monkey will not wait for exact change from the pizza guy.

What does that have to do with anything else I’ve written? Nothing. But it’s a blockquote, so in your head, you crossed a boundary when starting it and crossed back over when finishing it. It’s a different thing and that’s fine – tone, style, and even subject can shift and you’re okay with that. If that paragraph wasn’t blockquoted, you’d have been like…uh, what?

A Heading Does the Same Thing

You might think this paragraph starts something new, like this is the next section of this blog post. It’s not, but the heading made you think it was. You crossed over a boundary. (But it was fake, so come back …)

Look at the “###” in the press release below.

That’s a boundary. Mentally it tells you, “we’re done with the actual content of the release; this next paragraph is something different.”

- How about bullet points? This text is in a bullet point, so mentally, it should be a single, compartmentalized thought, more or less unrelated to the thoughts around it. (I wrote an entire blog post on this a few years ago.)

However, there are no content boundaries around paragraphs in an extended narrative. If you have a block of text that’s an unbroken series of paragraphs, then they all exist within the same content boundary and the reader expects them to be part of the same narrative flow. If one of them differs remarkably, it sticks out and you don’t forgive it.

And this is why it’s so hard to manage content down to the paragraph level. In technical documentation (where it works), content elements are often surrounded by their own content boundary and thus the reader makes them mentally “exempt” from the narrative flow. They “forgive” disruptions to the flow.

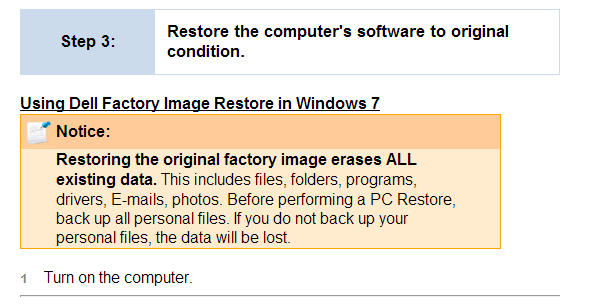

Consider this from a Dell support page:

See the peach inset box? That content is likely managed somewhere else and inserted here and in several other pages of content. It is a single, self-contained, encapsulatable piece of content which doesn’t depend on anything before or after. Visually, it’s set off in its own content boundary. As you start reading it, you’re mentally crossing a boundary, and you’re fine with changes in style and tone, and you have no expectation that it’s going to reference anything outside its boundaries.

To effectively manage content down to the paragraph or sentence level and re-use them in extended narratives, you would have to make sure each one was completely self-contained and match the style, tone, and tense of everything before or after. This is not easy.

Ann Rockley said as much to me the other day

You cannot arbitrarily chunk information and just assume it will be coherent when reassembled…There’s a reason why companies pay humans to do this.

The software vendors agree – all of them tend to couch their marketing in writer-centric terms. They position their value-add as providing assistance to writers, and I didn’t see one demo or video that showed overt encroachment on the writing process. Everything I saw showed blocks of content being inserted in and around significant narrative content provided by a writer – content that is presumably part of the narrative flow of that content boundary.

From Paul Trotter of Author-It:

[…] humans can never be replaced in the content authoring or translation process as it is a cognitive process that requires understanding of the meaning and voice/tone behind the content not just knowledge of the meaning of words and the statistical likelihood of their arrangement.

Narrative flow is a subtle thing, and taking arbitrary content and “making it flow” is something that it takes a human to do. A few months ago, in fact, we talked about software that was letting computers write news stories. The catch was that it could only write coherent stories about highly structured events:

[…] finance and sports are such natural subjects: Both involve the fluctuations of numbers – earnings per share, stock swings, ERAs, RBI. And stats geeks are always creating new data that can enrich a story. Baseball fans, for instance, have created models that calculate the odds of a team’s victory in every situation as the game progresses. So if something happens during one at-bat that suddenly changes the odds of victory from say, 40 percent to 60 percent, the algorithm can be programmed to highlight that pivotal play as the most dramatic moment of the game thus far.

Technical documentation is something else that’s far more structured than other kinds of writing. There are sections and subsections and tables and lists of tasks, etc. Each of these things brings with it some kinds of content boundaries that encapsulate it, and allow it to stand on its own, and thus be managed somewhere else and then reused.

But outside of technical documentation (or other highly structured content), it gets harder. Earlier, I said this:

The ultimate dream, of course, would be to assemble quality content while rarely having to write anything new. Just throw together references to small content chunks which already exist, and – voila! – out comes fresh, new content. In this particular instance, with this particular client, this might actually be a great outcome. But was it actually possible?

(Don’t look now, but that was a callback to something else in the narrative flow of this content boundary. Just sayin’…)

To do this, you quickly come to a sad conclusion: all content chunks would have to be written to be completely and utterly self-contained. They would have to maintain a consistent tone and style, would have to avoid referring to anything outside their boundary, and could never assume any level of knowledge or background like that which might have taken place in a few paragraphs of prior exposition.

Your writers would have to be indoctrinated into this style of writing. They would have to become used to stripping out all the narrative juice from their content lest they accidentally make it less reusable.

I’m reminded of a quote Josh Clark gave me once when we were talking about structuring content.

[Structuring content ] forces editors to approach their content like machines, thinking in terms of abstract fields that might be mixed and matched down the road.

The likely net result? Choppy, stilted content which might even be incoherent in some places. You might get to the editorial equivalent of The Uncanny Valley.

The uncanny valley is a hypothesis in the field of robotics and 3D computer animation, which holds that when human replicas look and act almost, but not perfectly, like actual human beings, it causes a response of revulsion among human observers.

And that’s where you’d probably end up – with slightly odd text that sort of seems right, but the reader notices that there’s something weird about it, and so doesn’t mentally engage with it. As they read, they keep stumbling over a growing…weirdness, as reused content keeps upsetting the narrative flow. Shortly thereafter, credibility goes out the window.

Technically, it might work, but you’d pay a vast price in content quality to achieve gains in efficiency, and when you include training time, interruptions to process flow, and license costs for software, you’d likely come out on the losing end, cost-wise.

And finally, if I can get philosophical for a second, this conclusion is a bit sad from an efficiency and productivity standpoint, but ultimately redeeming from a human standpoint. Here’s why –

We engage most intensely with the written word when we perceive another human being communicating with us from the other side. We connect to this, and we’re mentally and emotionally sensitive to changes in this connection. If we’re reading a log file, for instance, then we have no expectation of this connection, so we never engage at that level. But if we’re reading a narrative, we make this connection and we hang onto it subconsciously. If it gets pulled out from under us, we’re jarred out of our engagement by something that can only be described as…betrayal. From that point on, we’re guarded, and we’ll be careful not to engage again.

Tell me: how much of a gain in efficiency is worth that?