The CMS Implementation

I have two teenage daughters. They’re obsessed with their future weddings. They’ve both planned out the perfect day dozens of times. When they ask why I don’t get nearly as excited about it as they do, I always respond the same way: “I’m less concerned with your wedding day than I am with the 50 years that come after it.”

In the process of building a content-managed website, organizations often get obsessed with finding the right CMS for their needs. They’re dazzled by sales demos and starry-eyed over the things they’ll do once it’s implemented. Emboldened by finding what they consider a flawless piece of technology, they rush into the implementation, then don’t understand why the reality of what they wake up with every day doesn’t live up to their dreams.

Identifying and acquiring a CMS is only the first part of building a content-managed website. It’s like spending hours and hours at the building materials store, identifying and purchasing everything you need to build a house, and having those things delivered to an empty lot. It doesn’t matter how many materials you have, or whether or not they are high quality – someone still has to build a house with them.

What plays more into the success or failure of a website: the quality of the CMS, or the quality of the implementation? This is a hotly debated question. Can a fantastic CMS be ruined by a terrible implementation? And can a stellar implementation salvage what is an objectively poor CMS?

The answer to both questions is yes. The greatest CMS in the world can be rendered completely useless by a poor implementation, and a below-average CMS can be made surprisingly functional by a creative integrator who is willing to work around shortcomings.

Clearly, you’re ideally looking for both: a solid CMS coupled with a solid implementation. Even a simple understanding and acceptance of the fact that the implementation is just as important as the CMS itself will put you in the right frame of mind.

Principal Construction Versus Everything Else

An old adage of project management says if you want to know how long a project will take, “Add up the time you think it will take to complete all the tasks, then double it.” While clearly a joke, it’s often not far from the truth.

The amount of time required to implement a content management system is always more than the sum of its parts. Planning out every idiosyncrasy of an implementation before you start is not a straightforward process. During the project there will be bumps in the road that will lengthen the required time considerably: changes, hidden requirements, misunderstandings, staff turnover, bugs, and rework.

We tend to underestimate the time required by concentrating on the activities we consider “principal construction,” which are, by nature, development-centric. We look through the functionality required to finish the build, add up the implementation time, and think that’s everything that needs to be done.

Along the way, we forget things that fall outside the mainstream path. Things like:

- Environment setup, such as development, test, and integration environments, not to mention source control repositories and build servers

- Testing and QA, including the inevitable “fine-tuning” period in the days or weeks directly prior to launch when bug fixes consume the development team

- Content migration (a subject so chronically overlooked that I’ll devote an entire chapter to it)

- Editorial and administrator training – the initial editorial team, future team members, and ad hoc training on specific tasks

- Production environment infrastructure planning

- Deployment planning and execution, including initial launch and post-launch changes

- Documentation, either of the implementation itself or of the project process

- Project management, including progress reporting

- Internal marketing to affected staff and stakeholders

- User transition management, including moving user accounts, ensuring users are notified of changes, and password resets

- Load and security testing

- Transition of SSL certificates

- DNS changes and propagation

- URL redirection

These are all activities outside of principal construction, and they often get left out of estimates as a result. In some projects, the actual development becomes a minority of the work when compared to all the non-development work surrounding it.

That adage of doubling the initial effort estimate perhaps isn’t unreasonable after all.
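To make that arithmetic concrete, here is a minimal sketch in Python that tags each line item of an estimate as development (principal construction) or not, then reports how the full total compares to the development-only figure. The `Task` class, the task names, and the hour figures are all invented for illustration; they are not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours: float
    development: bool  # True for principal construction work


def summarize(tasks: list[Task]) -> dict[str, float]:
    """Split an estimate into development and non-development effort."""
    dev = sum(t.hours for t in tasks if t.development)
    other = sum(t.hours for t in tasks if not t.development)
    total = dev + other
    return {
        "development": dev,
        "non_development": other,
        "total": total,
        # How many times larger the full estimate is than the development-only one
        "multiplier": total / dev if dev else float("inf"),
    }


# Invented figures, for illustration only.
tasks = [
    Task("Content types and templates", 240, True),
    Task("Environment setup", 40, False),
    Task("Testing and QA", 80, False),
    Task("Content migration", 120, False),
    Task("Training and documentation", 40, False),
]
print(summarize(tasks))
```

With these made-up numbers, the items that usually get forgotten more than double the development-only figure, which is the point of the adage.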

Types of Implementations

At the most general level, CMS implementations can be grouped into three types, based on their relationship with the current website:

CMS only, or “forklift”: In this case, the goal is for nothing on the website to change – the design, the content, and the information architecture will carry over identically, but the CMS powering the website will be swapped for another (or, less commonly, a statically managed website will have a CMS introduced). In this type of implementation, the current website is the model for the new website.

CMS plus reskin or reorganization: This is an extension to the forklift implementation where the organization decides to do some light housekeeping, as they’re going to the trouble of implementing the CMS. New designs are often applied since templating has to be redone anyway, and a content migration often means that content can be cleaned up or reorganized without considerable extra work. Some things will change, but the changes are limited to styling and editorial, which means the current website is still somewhat relevant as a model.

Complete rebuild: In these cases, the entire website is reenvisioned. The new CMS might be only one part of a larger digital turnover. The website will get a new design, new content, new information architecture, and new functionality – little or nothing of the old site will remain. The CMS implementation often comes after a large content strategy and UX planning phase. Clearly, the existing website is irrelevant as a model for the new website.

While the first type, the forklift implementation, might seem the simplest, it can be deceptively complex because you’re now faced with wrapping an existing base of historic functionality around a new CMS. The new system will invariably do things differently than the old CMS, and the website has likely adapted over time to fit how the old CMS worked. Implementation teams often find themselves trying to backport existing functionality into a new system to replicate how a website evolved around an old system.

The edge case adage discussed earlier holds true here as well. Given a sufficient amount of time, editors will have found every nook and cranny of a CMS. As mentioned in Other Features, any given editor might only use 25% of the total functionality, but every editor might be using a different 25%, meaning the editorial team collectively expects all of the old functionality to work in the new system, sometimes in the same manner, even if that’s an inefficient way of accomplishing some goal.

At the other extreme, a complete rebuild at least gives you the flexibility to weigh new functionality against the new CMS that will power it. Weighing new design and functionality requirements against technical feasibility is an expected dynamic of a rebuild project, which gives an implementation team the opportunity to steer plans toward functionality the new CMS will support.

Preimplementation

Before you implement, you need to take stock of what you have to work with, then use that to develop a plan.

Discovery and Preimplementation Artifacts

The amount of preimplementation documentation depends highly on the level of change planned from the existing website.

For a forklift implementation, there’s a possibility of using the current website as a de facto requirements document. When simply swapping the CMS out from under an existing website, a project mandate might simply be to make things function the same as they have in the past. So long as the development team sufficiently understands the inner workings of the current site and someone from the editorial team is available for questions, this might suffice.

If the website is being reskinned or reorganized, some consideration needs to be paid to how the changes will affect the CMS. If the changes are limited to the design and content alone – and none of these changes require modification to the content model itself – then they might not be relevant to the development effort. For example, the developer doesn’t care if the design calls for a serif or sans-serif font, or if the content is written in the first- or third-person perspective. The development effort is the same.

Be careful here – design and content changes need to be thoroughly investigated for potential content model changes. Seemingly simple design and content changes might require significant underlying model changes to support, and these changes have an uncanny way of snowballing. Changing thing A suddenly forces changes to thing B and thing C. Changes beget more changes, until, two weeks later, you’re changing thing Z and realizing the initial change went much deeper than you thought.

For a complete rebuild, significant site documentation is required for an effective implementation. At a minimum, the development team will need the following:

- A set of wireframes that displays the layout of each major content type, including all relevant content elements

- A set of functional requirements (or equivalent annotations to the wireframes) that explains how nonvisual functionality should work, especially navigation and contextual functionality in the surround

- A sitemap showing an overhead view of how all the content fits together and is organized

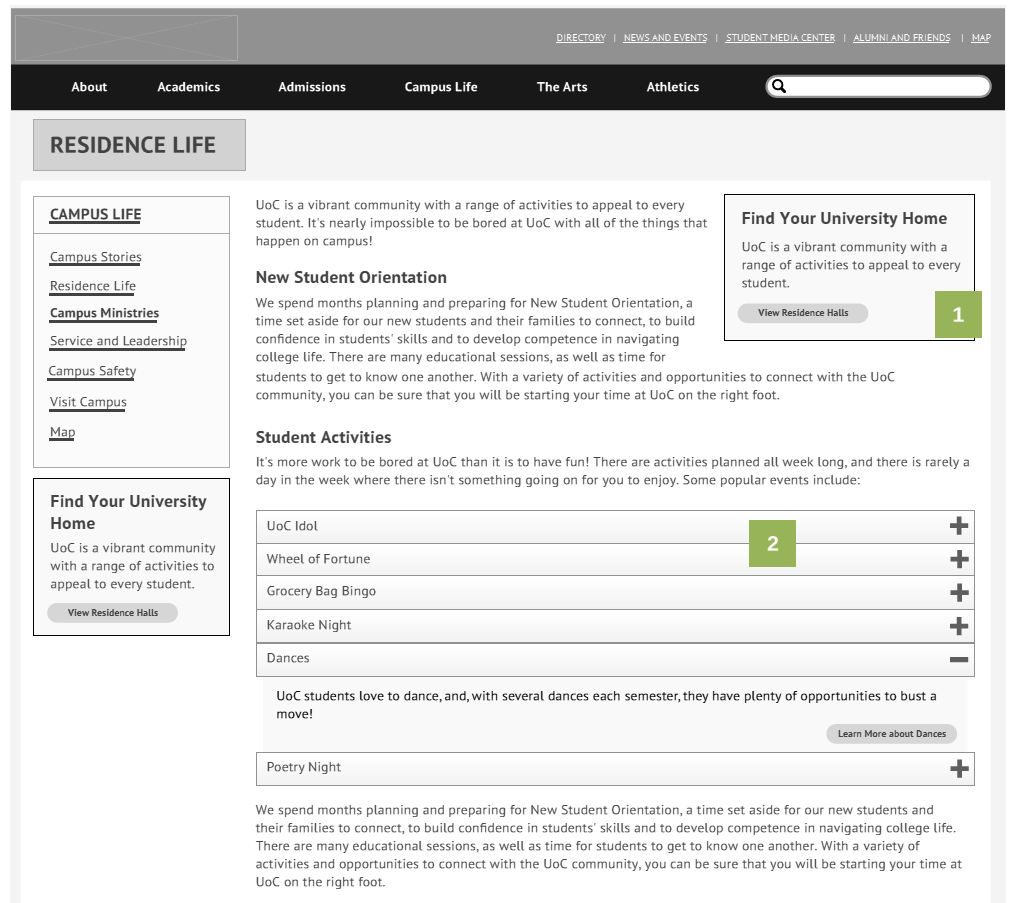

A sample wireframe with numbered callouts, which would normally be annotated with nonvisual information about how the elements function – wireframes are helpful to separate content and functionality from the finished design, which can be distracting when developing a technical plan

Depending on the scope of the integrator’s responsibilities, they might also need:

- A fully rendered design with all supporting art files

- Frontend HTML/CSS of the implemented design

Over the last half-decade, frontend development has become its own discipline. Implementations used to be achievable with a single set of development skills, but with the advent of responsive design and increased use of client-side technologies, the frontend development is now often assigned its own team. Additionally, many projects will have the frontend development completed before the CMS implementation begins.

The discovery phase of a CMS project is normally handled by a team of content strategists or UX/IA specialists who work with the organization to determine needs. A key question is whether this team should work with knowledge of the intended CMS, assuming that information is even knowable at the time.

Some say that sites should be planned to be CMS-agnostic and that the focus should be on giving the organization the best possible website. However, a more practical school of thought says that if the intended CMS is known, then plans and designs need to be vetted for what can actually be implemented, which means filtering out idealistic and grandiose plans that can’t be brought to fruition. Planning a comprehensive personalization strategy, for example, is an expensive waste of time if your CMS doesn’t support personalization and you can’t integrate the necessary functionality from an external service.

If the intended CMS is known, it’s generally wise to have the implementation team review plans and designs for feasibility as they become available. Early discussions about hypothetical functionality can help ground the design team in a firm understanding of which ideas can actually work.

Developing the Technical Plan

There’s an old saying among trial lawyers: “Never ask a question you don’t already know the answer to.” Something similar can be said of implementations: “Don’t ever start implementing a wireframe that you don’t already have a plan for.”

At some point, the implementation team needs to review the preimplementation artifacts and come up with technical plans for everything in them. While it’s tempting to try to plan a site from the top down, it’s best to start from the bottom up. This means paging through the set of wireframes and asking a lot of questions about each.

These questions need to be answered in some form prior to development. In some cases, the developer will write a formal technical implementation plan (TIP). In other cases, the wireframes are simply reviewed in a “build meeting” to ensure understanding and to make sure there’s nothing present that isn’t implementable.

For each wireframe, consider the following questions:

- Is there an operative content object present? What type is it? What attributes does it need to support?

- Can a clear line be drawn around the operative content object and the surround? What content will be handled by the object’s template, and what content is in the surround?

- Of the content in the surround, what is contextually dependent on the operative content object?

- Of this contextual information, what will be derived from context or geographic position, and what is based on the discrete content present in the object?

- What aggregations are present? Can they be powered through geography alone, or will there need to be secondary structures created to support them?

- What non-content functionality is present? How will this execute alongside the CMS?

- How repeatable do the elements in this wireframe need to be? Are they just for this one page, or do they occur again and again?

- Is this wireframe literal, or simply suggestive of a wide range of possible manifestations? What areas on this wireframe might be swappable by editors?

- How often will this particular content need to be modified? Is this something editors will manage every day, or will this be set on launch and never touched again?

- How much relation is there to future functionality? Should this wireframe be interpreted narrowly as an exact, literal representation of what the site planner wants, or is it indicative or suggestive of other things?

These are all visual questions, driven by what’s actually on the wireframe. However, lurking below the surface are several questions about the content represented in the wireframe, which have nothing to do with the wireframe itself:

- What are the URL requirements for this content?

- What are the editorial workflow requirements? Will an editor create this content directly in the CMS interface, or is it coming from somewhere else? What approvals need to be in place for this content?

- Who should have permissions to this content? Who can create it, edit it, or delete it?

- Does the content have to be localized? Into how many languages? What non-text elements (images, for example) will also need to be localized?

- Does this content need to be versioned? Will it need to be archived at some point? In the context of this project and this content, what does that mean?

- What other channels does this content need to be published into? Will this happen on the same schedule? Does it need to happen at any time? Who can initiate this?

- Does this content exist now? If so, where is it, and how can we get access to it for migration? What is the current velocity of this content – how fast does it change or turn over?

To this end, the most valuable question the developer can ask might simply be: “Where does this come from?”

For any element on the wireframe, the site planners, owners, or editors need to explain where it comes from. Is it managed content? Is it from the operative content object? Is it from another content object? Is it from an external data source? Is it contextual logic, like related content or sidebar navigation? If so, on what data is this logic based?

They do not need to have a complete technical understanding (that is the developer’s job), but they need to have at least some logical idea of where the content comes from. If they don’t, the developer can step back and ask more general questions about the nature of the information:

- Is it specific to this page?

- Is it global?

- Is it based on the content type?

- Can everyone see it?

- Is it from someplace outside the CMS?

From the answers, the developer might be able to extrapolate some content model or method of populating the information. This should be repeated for every single element on the wireframe: every menu, every sidebar element, every snippet of text.
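As a rough illustration of that extrapolation, the following sketch maps yes/no answers to the general questions above onto a candidate source for a wireframe element. The `Answers` and `Source` names, and the order in which the answers are checked, are assumptions made for this example; on a real project the result is a starting point for conversation, not a decision.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    EXTERNAL = "external system (API call or import)"
    OPERATIVE_OBJECT = "attribute of the operative content object"
    TYPE_TEMPLATE = "fixed in the template for this content type"
    GLOBAL = "global content, such as a site-wide settings object"
    UNKNOWN = "unresolved: keep asking questions"


@dataclass
class Answers:
    specific_to_page: bool
    is_global: bool
    based_on_type: bool
    everyone_can_see: bool
    outside_cms: bool


def classify(a: Answers) -> tuple[Source, bool]:
    """Return a candidate source, plus whether permission or personalization
    rules are needed (because not everyone can see the element)."""
    if a.outside_cms:
        source = Source.EXTERNAL
    elif a.specific_to_page:
        source = Source.OPERATIVE_OBJECT
    elif a.based_on_type:
        source = Source.TYPE_TEMPLATE
    elif a.is_global:
        source = Source.GLOBAL
    else:
        source = Source.UNKNOWN
    return source, not a.everyone_can_see


# A sidebar phone number that is identical on every page and visible to everyone
print(classify(Answers(specific_to_page=False, is_global=True, based_on_type=False,
                       everyone_can_see=True, outside_cms=False)))
```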

It’s easy to short-circuit this process. It can get tedious, and there’s a temptation to think, “Well, I’m sure someone has a plan for that.” Resist this temptation. The process of answering these questions is vital, both for the developer’s understanding and also for the editors and site planners. Many times, the provided answers will be in conflict, and you’ll uncover misunderstandings and incorrect assumptions.

Equally important as the actual answers, the directions these conversations go in will often reveal underlying motivations and goals. The answer to where something comes from will often lead to a discussion about why it’s there and what goal the site planner was trying to achieve with it. These discussions help provide context and background, which the developers can use later in the project when they need to make more intricate implementation decisions.

Working from the bottom up through the wireframes one by one, the team will find that the answers to larger, top-down questions slowly begin to fill in. Once every interface has been reviewed, the team can step back and look at larger questions such as:

- What is the shape of this content? What content geography is needed to support it?

- What does the aggregate content model look like? Can types be abstracted to base types from which other types might inherit? Can type composition be used to simplify the model?

- What content appears to be global to the installation, and where will that content be stored?

- What larger, nongeographic aggregation structures are needed? Is there a global tagging, categorization, or menuing strategy that ties the content together?

- What is the overall need for page composition? How much of the site is templated, and how much is artisanal?

- What do the aggregate localization requirements look like? How many languages will need to be supported, and how should language preferences and fallbacks be managed?

- What does the user model look like? How many different user groups will need to be created, and what reach will each of them have?

- What external systems need to be integrated with? What APIs are available, and what access is allowed? Can this information be retrieved in real time, or does an import strategy need to be defined?

- What does our overall migration strategy look like?

- If the target CMS is known, how well does the revealed functionality overlay on what’s available out of the box? How much customization will be needed to complete the implementation?

- If the target CMS is not known, what CMS might be a good fit?
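Some of these top-down questions translate almost directly into logic. For instance, a language fallback policy might be sketched like this, assuming each content object has a set of available language versions and the site defines a fallback chain per language. The function name, the language codes, and the chain format are hypothetical.

```python
from typing import Optional


def resolve_language(requested: str, available: set[str],
                     fallbacks: dict[str, list[str]],
                     default: str) -> Optional[str]:
    """Choose which language version of a content object to render.

    Tries the requested language, then its configured fallback chain,
    then the site default. Returns None if none of those versions exist.
    """
    for lang in [requested, *fallbacks.get(requested, []), default]:
        if lang in available:
            return lang
    return None


# Hypothetical policy: Canadian French falls back to French, then to the default.
FALLBACKS = {"fr-CA": ["fr-FR"], "de-AT": ["de-DE"]}

print(resolve_language("fr-CA", {"en-US", "fr-FR"}, FALLBACKS, "en-US"))  # fr-FR
print(resolve_language("de-AT", {"en-US"}, FALLBACKS, "en-US"))           # en-US
```

Whether a `None` result should produce a 404, a placeholder, or something else is exactly the kind of decision the technical plan needs to record.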

The answers to both the big and small questions collectively form the technical plan. This plan will drive the implementation process and the scoping and budgeting process.

Taking the Organization and the Team into Account

It’s important to understand that answers to the questions in the previous section are simply not universal. Many are contextual to the specific combination of this organization, this particular team, and the long-term plan for this website. The same site plan for combination X might be implemented differently for combination Y.

Things the team will need to take into account during a feature-level analysis include:

- How much budget is available for implementation?

- How experienced are the editors? How well can they be trained and be expected to understand technical concepts?

- What portion of the budget will a particular feature consume, and does that need to be balanced against its value? Can it be responsibly implemented at a lower level of functionality or polish to save budget?

- To what extent does the organization want to make structural changes to the site without developer involvement? To what extent will it have users who understand HTML or CSS enough to share some responsibility for output?

- How long will this implementation be used? Is it permanent or temporary?

- What is the future development plan? Is there a phase 2? Does the organization plan to invest in this site over the long term, or is this a one-shot effort? What level of internal developer support does it have?

Here’s an example.

Say the site design calls for numerous text callouts, all with different styles. Should there be a visual “palette” of different styles that editors can browse through and select one with a mouse click? Or, on the opposite end of the scale, can these editors be trusted with a simple text box in which to type in the name of a known CSS class that will be applied to the surrounding DIV?

The former is clearly more user-friendly and polished, but it may also cost considerably more to implement and be less flexible. With the second option, new CSS classes can be created on the fly and editors can simply type them in, while with the first option, the style palette might have to be manually changed to represent each new style.
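The two options can be sketched side by side. In this hypothetical Python example, the palette is a fixed enumeration that a developer must edit to add a style, while the free-text option accepts any string that looks like a CSS class name. The regular expression is a simplified approximation of CSS identifier syntax (it ignores escapes and non-ASCII characters), and all the class names are invented.

```python
import html
import re
from enum import Enum


class CalloutStyle(Enum):
    """Option 1: a fixed palette. Adding a style means changing this code."""
    NOTE = "callout-note"
    WARNING = "callout-warning"
    QUOTE = "callout-quote"


# Option 2: a free-text box. Any string shaped like a CSS class name is accepted,
# so a new class added to the stylesheet can be used without a code change.
# Simplified pattern: ignores CSS escapes and non-ASCII identifiers.
CSS_CLASS = re.compile(r"-?[_a-zA-Z][_a-zA-Z0-9-]*")


def render_callout(text: str, css_class: str) -> str:
    """Wrap escaped editor text in a DIV carrying the chosen class."""
    if not CSS_CLASS.fullmatch(css_class):
        raise ValueError(f"not a valid CSS class name: {css_class!r}")
    return f'<div class="{css_class}">{html.escape(text)}</div>'


print(render_callout("Read this first.", CalloutStyle.WARNING.value))
print(render_callout("Holiday hours", "callout-holiday"))  # not in the palette
```

The free-text path trades safety for flexibility: nothing stops an editor from typing a class that exists in no stylesheet, which is why the choice depends on the editors involved.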

Some editors want the most user-friendly, controlled experience possible. Others want to get “close to the metal” and have more manual control over these things. These editors might resent being spoon-fed options, and be annoyed that they can’t just type in a CSS class that they know exists. Only knowledge of the editors’ preferences, skill, training, experience, and governance policies can help you make this decision.

The usage of Markdown and other markup formats is another clear example. Some editors enjoy the precision and speed that Markdown brings with it. Other editors expect WYSIWYG editing, and might consider Markdown as “low rent” and even question why such an expensive CMS or implementation isn’t competent enough to feel like Microsoft Word. In these situations, does the technical plan acquiesce to what the editors expect, or does the case need to be made for the alternative?

This opens up much larger questions to do with user adoption and internal marketing, which are crucial but beyond the scope of this book. Perhaps 10–15% of the effort for any implementation might be social engineering and training to get users on board, both with the system itself and with the decisions that were made during the implementation. Back to our example, do editors simply need to be trained on Markdown, and educated about its benefits and why it’s the right solution for this situation? How far down that rabbit hole is the team prepared to go? Will they need to “walk that decision back” to larger concepts like the separation of content and presentation?

The end goals of a project will also exert an influence on implementation decisions. Consider these two projects:

- A temporary promotional microsite to support a single conference event with content that won’t change. This site needs to be launched very quickly, and will stay up for six months at most.

- The main website for the organization. There will be thousands of pages of content, authored by dozens of editors of varying skill levels turning over content multiple times per day. This implementation needs to be functional and relevant for at least the next five years.

These two scenarios will likely result in drastically different implementation decisions. For the temporary microsite, corners might be justifiably cut for the sake of budget, time, and the fact that more complex implementation won’t provide much return on investment. However, the main site of the organization has a much longer time span and a larger, distributed editorial base. For this project, a more measured implementation is required. Deeper investments in usability and flexibility will have the breadth and time required to provide value to justify their expense.

The Urge to Generalize

Developers love to generalize. Specificity gives us a lingering unease, like we’re building something too tight around a set of requirements, and what if there’s more functionality to be gained by loosening up a bit?

Additionally, developers love to deal in abstractions. Yes, this particular content object is of the type Article, but it could also be considered of the type Web Page, and even more abstractly, it’s an instance of some root, generic type like Content Object.
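In code, that chain of abstraction often appears as an inheritance hierarchy. The following is a minimal sketch using Python dataclasses; the type names come from the paragraph above, but the attributes are assumptions chosen for illustration, not the model of any particular CMS.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ContentObject:
    """Root type: anything the CMS stores."""
    id: int
    name: str


@dataclass
class WebPage(ContentObject):
    """Any content object that renders at its own URL."""
    url_segment: str


@dataclass
class Article(WebPage):
    """The concrete type an editor actually chooses."""
    body: str
    published: date


article = Article(id=1, name="New Office", url_segment="new-office",
                  body="We have moved.", published=date(2024, 1, 15))

# The same object answers to every level of the hierarchy.
print(isinstance(article, WebPage), isinstance(article, ContentObject))  # True True
```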

This manifests itself as a desire to interpret wireframes and site plans, and generalize them in such a way as to handle situations that aren’t explicitly called for. Developers have mental conversations like this all the time:

Well, a News Article is really just a Page with a Date and Author. If we add a date field to the Page type, then we can collapse those two types into one. And then, in the future, they can create mixed lists of Pages and News Articles. For that matter, I wonder if we shouldn’t just make Help Topic a Page too. We could make just the Subject attribute a category assignment, and now we have one less type and those can be added to lists too. Plus, they could add Subjects to other types of content too. You know, the home page wireframe didn’t call for it, but I could see them wanting to add these lists in the sidebars in the future.

Some of this might be interpreted as laziness, but it’s mostly a genuine attempt to enlarge the solution to encompass scenarios which the developer is projecting onto the users. If a user wants to do thing X, then the developer naturally thinks ahead that he might want to do thing Y in the future, and the developer can address that need in advance.

There’s truly nothing a developer likes more than hearing an editor say, “We’re thinking about maybe doing thing Y…” and cutting them off, leaning back in her chair, and saying, “No problem. I figured you would want that, so I already handled it for you…” [insert exaggerated, magician-like hand flourish here].

Some of this is healthy, but sometimes developers can be a little too clever. We’ve discussed previously how developers are used to thinking about complex information problems and dealing in abstractions. Occasionally they can be convinced that editors will share in that enjoyment and skill. But usually, editors like concreteness to the same extent that developers like abstraction.

Developers and site planners need to have productive conversations about whether the site plan is suggestive or literal, and those need to be followed by conversations with editors about things they might want to do in the future, and then those need to be followed by conversations with project managers about how these things might affect budget and timeline, both for the immediate project and potentially for follow-on projects as well.

In some cases, developers might need to be reminded that they’re not building a framework or an abstraction. They are, in fact, building an actual website with a finite set of problems to be solved.

The Implementation Process

CMS implementations can be difficult to generalize, but the following description is meant to be as inclusive as possible and to reasonably represent the significant phases through which an implementation will progress.

Environment Setup

In most cases, developers will develop the new website on their local workstations. They will submit their code to a central repository, which is a source code management (SCM) platform such as Git, Subversion, or Team Foundation Server. Multiple developers might be submitting new code, which is then combined and deployed to an integration server for review and testing.

Developers continue a cycle of developing new features, submitting their own code to SCM, and downloading code submitted by others to bring their local workstations up to date. Throughout, each developer maintains a fully functioning version of the CMS and developing website on his or her local workstation.

The process of deploying this code to servers is generically known as “building,” and it is usually handled by tools called “build servers.” A build server is software running on the integration server that monitors the SCM repository. It detects new code submissions and launches a process that checks out the code and performs the tasks necessary to get it running on the server: compiling the code, deleting source files, copying the code to the web server directory, injecting license files, etc.
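The steps a build server runs can be sketched as a small function. This hypothetical Python example covers only the copy-related steps: it copies a checked-out tree into a clean web root while leaving source files behind, then writes a license file. Compilation is omitted, and both the set of "source" extensions and the `license.txt` file name are assumptions.

```python
import shutil
from pathlib import Path

# Assumption: files with these extensions are source, not deployable output.
SOURCE_SUFFIXES = {".cs", ".ts", ".scss"}


def build(checkout: Path, web_root: Path, license_text: str) -> list[str]:
    """Copy a checked-out tree into a clean web root, leaving source files
    behind, then inject a license file. Returns the deployed relative paths."""
    if web_root.exists():
        shutil.rmtree(web_root)  # start every build from an empty directory
    web_root.mkdir(parents=True)
    deployed = []
    for path in sorted(checkout.rglob("*")):
        if path.is_dir() or path.suffix in SOURCE_SUFFIXES:
            continue
        relative = path.relative_to(checkout)
        target = web_root / relative
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
        deployed.append(relative.as_posix())
    (web_root / "license.txt").write_text(license_text)
    deployed.append("license.txt")
    return deployed
```

A real build server would run steps like these automatically whenever it detects a new commit, after compiling the code.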

In addition to the integration server, a “test” server is often used to provide a more stable environment. While the website is built on the integration server, new code (which developers may submit several times per hour) is deployed to the test server less frequently to maintain a semistable environment for testing. The test server usually has a build server of its own, but it’s either activated manually or connected to a different branch of the SCM repository where code is merged less frequently.
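The difference between the integration and test servers often comes down to a small piece of configuration. The sketch below assumes one common arrangement: integration builds on every commit to a development branch, while test builds only from a release branch where code is merged less often. The branch names are hypothetical, and setting `automatic=False` on an environment models the alternative of a manually activated build.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    branch: str      # SCM branch this environment builds from
    automatic: bool  # build on every commit, or only when someone triggers it


# Hypothetical arrangement: test follows a branch that is merged less often.
ENVIRONMENTS = [
    Environment("integration", branch="develop", automatic=True),
    Environment("test", branch="release", automatic=True),
]


def environments_to_build(branch: str, manual_trigger: bool = False) -> list[str]:
    """Names of the environments that should rebuild after a commit to `branch`."""
    return [
        env.name
        for env in ENVIRONMENTS
        if env.branch == branch and (env.automatic or manual_trigger)
    ]


print(environments_to_build("develop"))  # ['integration']
print(environments_to_build("release"))  # ['test']
```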

Installation, Configuration, and Content Reconciliation

Once all necessary environments have been created, the CMS is installed and configured. This is less momentous than it sounds, as many CMSs will install merely through double-clicking an icon, or deploying files to the root of a web server and walking through an installation wizard.

Some CMSs are designed as self-contained web applications that are purposely independent of anything else on the server. In these cases, the CMS isn’t installed on the server so much as it’s installed within the web server process. Others require a more holistic installation where background services and perhaps other files are stored outside the bounds of the web server. In some of these cases, these files and services can be used by more than one implementation of that CMS on the same server.

Once the installation is complete, the resulting website – simple as it is – will need to be checked into SCM, deployed to integration, and then checked out by other developers.

Reconciling the installation and its content between all developers working on the project can be a tricky phase. One of the perennial questions is how to handle the database that powers most CMSs. Does every developer keep their own copy of the database, or do all the developers talk to a central database? And which databases do the integration and test servers work with – their own, or a central version?

If each developer has a copy of the database, they’re free to work knowing that their changes won’t affect other developers. However, multiple database copies mean that everyone is working from a different copy of the content, and code changes requiring accompanying data changes might require these changes to be replicated on multiple versions of the database. Code might be deployed that breaks other developers’ installations because the accompanying data changes haven’t been made on their copies of the database.

This can be eased considerably by a CMS that stores and manages configuration as code. In some systems, creating a new content type is accomplished by writing code: in Plone, a new content type is defined as a new Python file; in Episerver, a new content type is a new C# class file. With these systems, deploying code and configuration are the same process. The act of a developer deploying code also deploys the configuration changes necessary for that code to work.
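The idea behind configuration as code can be sketched without any particular CMS. In this hypothetical Python example, a decorator registers a class as a content type when its module is imported, so any environment that receives the code also receives the type definition. This is not Plone's or Episerver's actual API; `CONTENT_TYPES` and `content_type` are stand-ins invented for the illustration.

```python
from dataclasses import dataclass, fields

# Stand-in for however a CMS discovers content types defined in code.
CONTENT_TYPES: dict[str, type] = {}


def content_type(cls):
    """Class decorator: register `cls` as a content type at import time."""
    CONTENT_TYPES[cls.__name__] = cls
    return cls


@content_type
@dataclass
class PressRelease:
    title: str
    body: str
    embargo_date: str


# Every environment that loads this code sees the same type definitions.
print({name: [f.name for f in fields(t)] for name, t in CONTENT_TYPES.items()})
```

Adding a property is then a code change like any other: it goes through source control, gets built, and arrives on every environment together with the templates that depend on it.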

In other systems, new content types might be created by clicking through the administrative interface of the CMS. These type changes are stored in the database to which that copy of the CMS is connected. If a developer creates a new content type on his local workstation, he now has that content type definition local to his database. He either needs to recreate the type on the integration server (and then the test server, and then the production server…) or use some external tool to move that data from his database to another.
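When type definitions live in the database, moving them means extracting rows from one database and applying them to another. The following sketch assumes, purely for illustration, that definitions are stored as JSON in a `content_types` table in SQLite; real CMSs use their own schemas and usually a different database engine.

```python
import json
import sqlite3

SCHEMA = ("CREATE TABLE IF NOT EXISTS content_types "
          "(name TEXT PRIMARY KEY, definition TEXT NOT NULL)")


def export_types(db: sqlite3.Connection) -> str:
    """Serialize every type definition in `db` to a JSON document."""
    rows = db.execute("SELECT name, definition FROM content_types ORDER BY name")
    return json.dumps({name: json.loads(definition) for name, definition in rows})


def import_types(db: sqlite3.Connection, payload: str) -> None:
    """Create or overwrite type definitions in `db` from an exported document."""
    db.execute(SCHEMA)
    with db:  # commit all rows together, or none on error
        for name, definition in json.loads(payload).items():
            db.execute("INSERT OR REPLACE INTO content_types VALUES (?, ?)",
                       (name, json.dumps(definition)))


# A developer creates a type locally...
local = sqlite3.connect(":memory:")
local.execute(SCHEMA)
with local:
    local.execute("INSERT INTO content_types VALUES (?, ?)",
                  ("Event", json.dumps({"fields": ["title", "start_date"]})))

# ...and pushes it to the integration database instead of re-creating it by hand.
integration = sqlite3.connect(":memory:")
import_types(integration, export_types(local))
print(integration.execute("SELECT name, definition FROM content_types").fetchall())
```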

Finally, how do the developers account for content continuously created by the editorial team? If editors find a bug with new content, or a developer needs to embark on changes that require the latest content, how does the developer bring her local database up to date with production?

The process of “reconciling” content changes is the bane of CMS developers. While some systems have developed considerable technology to migrate content changes between environments, other systems simply have a developer community that has gotten used to manually pushing content around. Other CMSs have external vendors that specialize in tools specifically to solve problems of reconciliation.

Developers will usually work off a local database that slowly becomes more and more out of date until they feel it’s sufficiently “stale” that they need to refresh it from the production or test data, often via a manual backup and restore.
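The refresh itself is often nothing more than a backup and restore. The sketch below assumes, purely for illustration, that both databases are SQLite files (most CMSs use a server database with its own dump and restore tools) and uses the standard library's `sqlite3.Connection.backup` method. The one-week staleness threshold is an arbitrary assumption.

```python
import sqlite3
import time
from typing import Optional

# Assumption: a local copy older than one week counts as "stale".
STALE_AFTER_SECONDS = 7 * 24 * 60 * 60


def is_stale(last_refreshed: float, now: Optional[float] = None) -> bool:
    """True if the local copy was last refreshed longer ago than the threshold."""
    now = time.time() if now is None else now
    return now - last_refreshed > STALE_AFTER_SECONDS


def refresh_local(source: sqlite3.Connection, local: sqlite3.Connection) -> None:
    """Overwrite the local database with a complete copy of the source."""
    source.backup(local)
```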

A notable milestone in the implementation process occurs when all developers have a running copy of the codebase on their workstations, this code can be submitted and built successfully in all integration and test environments, and a plan is in place for data reconciliation.

Content Modeling, Aggregation Modeling, and Rough-in

Just like you can’t test drive a car without putting gas in it, you can’t develop a CMS without having content in it. The content types for the required model need to be created. Types need to be defined and properties need to be added, along with their accompanying validation rules. Custom properties might need to be developed where the built-in property types fall short.
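A minimal Python sketch of properties with validation rules, assuming a hypothetical `Property` definition with just two rules (required and maximum length); real CMSs offer far richer validation, configured through their own APIs or interfaces.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Property:
    """Hypothetical property definition with two simple validation rules."""
    name: str
    required: bool = False
    max_length: Optional[int] = None


def validate(properties: list, values: dict) -> list:
    """Return a list of validation errors; an empty list means the object is valid."""
    errors = []
    for prop in properties:
        value = values.get(prop.name)
        if prop.required and not value:
            errors.append(f"{prop.name} is required")
        elif prop.max_length is not None and value and len(value) > prop.max_length:
            errors.append(f"{prop.name} exceeds {prop.max_length} characters")
    return errors


ARTICLE_PROPERTIES = [
    Property("title", required=True, max_length=100),
    Property("body", required=True),
    Property("summary", max_length=250),
]
```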

In addition to the discrete model of each type, relationships between types and objects need to be defined, including properties that reference other objects, and relationships between content types. This means that at least some of the content tree will need to be “roughed in” to represent these relationships before further development can continue.

For example, if you’re publishing a magazine, you might have an Issue type, a Section type, and an Article type. An issue will have multiple sections as children, which will have multiple articles as children. You can’t work on templating or other functionality until you have a representative set of content roughed in, which means creating “dummy” content as a placeholder to continue developing.
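Rough-in content for the magazine example can be generated rather than typed in by hand. This Python sketch assumes a hypothetical in-memory `Node` structure standing in for the CMS's content tree; in practice the same loop would call the CMS's own API to create objects.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a content object in the CMS tree."""
    type_name: str
    title: str
    children: list = field(default_factory=list)


def rough_in_issue(number: int, sections: int = 3, articles: int = 4) -> Node:
    """Build a placeholder Issue > Section > Article tree for development."""
    issue = Node("Issue", f"Issue {number}")
    for s in range(1, sections + 1):
        section = Node("Section", f"Section {s}")
        for a in range(1, articles + 1):
            section.children.append(Node("Article", f"Placeholder article {s}.{a}"))
        issue.children.append(section)
    return issue
```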

Implicit navigation is similarly dependent on a roughed-in content tree. If the navigation for a site is rendered by iterating through the tree to form primary and secondary content menus, objects will need to be created before development can continue.
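A minimal sketch of implicit navigation, assuming a hypothetical `Page` tree: the primary menu is the children of the root, and the secondary menu is the children of the current top-level section. It shows why these menus render nothing useful until the tree has been roughed in.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    """Hypothetical page in the content tree."""
    title: str
    children: list = field(default_factory=list)


def primary_menu(root: Page) -> list:
    """Primary navigation: the titles of the root's children, in tree order."""
    return [child.title for child in root.children]


def secondary_menu(root: Page, section_title: str) -> list:
    """Secondary navigation: the children of the named top-level section."""
    for child in root.children:
        if child.title == section_title:
            return [grandchild.title for grandchild in child.children]
    return []
```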

Note that it’s not uncommon to find problems during rough-in. Plans that seemed to make sense in the abstract may become clearly unworkable when actually creating content. Be prepared for some plans to need reworking during this phase. This is a natural part of the implementation process.

Other modeling tasks include:

- Defining permissible content aggregations: To continue the previous example, perhaps we stipulate that an Issue object can only contain children of type Section, which can further only contain children of type Article.

- Refining the editorial interface: Just creating the types and properties isn’t enough. The editorial interface needs to be considered from a UX standpoint: Are items labeled clearly? Is there adequate help text? Have unnecessary interface elements been removed? Have advanced options been hidden from users who won’t understand or shouldn’t have access to them?

- Defining permissions: This requires at least a minimal rough-in of the user model, so that groups can be given varying access to content. Specifically, be careful about granting delete permission, since many content objects will be the “load-bearing walls” of the content structure, and if they were deleted, the entire site might stop working.
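The first and last items above can be expressed as simple rules. This is a sketch with hypothetical type and group names (`Issue`, `Section`, `Article`, `Editors`, `Administrators`); most CMSs configure these restrictions through their own settings rather than custom code.

```python
# Hypothetical aggregation rules: which child types may be created under which parents.
ALLOWED_CHILDREN = {
    "Issue": {"Section"},
    "Section": {"Article"},
    "Article": set(),
}

# Hypothetical "load-bearing" types that only administrators may delete.
STRUCTURAL_TYPES = {"Issue", "Section"}


def can_add_child(parent_type: str, child_type: str) -> bool:
    """True if child_type is a permitted child of parent_type."""
    return child_type in ALLOWED_CHILDREN.get(parent_type, set())


def can_delete(type_name: str, user_groups: set) -> bool:
    """Restrict deletion of structural objects to administrators."""
    if type_name in STRUCTURAL_TYPES:
        return "Administrators" in user_groups
    return bool(user_groups & {"Editors", "Administrators"})
```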

Beyond actual content, other structures might have to be created at this point to support various aggregations, including:

- Category trees and taxonomies

- Controlled tag sets

- Menus

- Search indexes

- Lists and collections

- Configured searches

When content modeling is complete, the basic content structure should be available, and it should be resilient, which means protected from careless (or even malicious) usage. Types should be validated correctly, any hierarchies or relationships should be enforced, and the editorial interface should be intuitive and safe for editors.

Early Content Migration

While the entire next chapter is devoted to content migration, it’s important to note that it should begin in some form as soon as a valid content model is available. Many implementations have run off the rails at the end when “real” content is migrated, revealing multiple problems.

Content migrated at this stage doesn’t have to be complete or perfect, but it needs to be pushed into the system regardless. There is just no substitute for having real content in a CMS while it’s being developed.

Early content migration can be considered “extended content rough-in.” Much like the continuous integration philosophy in development seeks to fully integrate code early and often, a “continuous migration” philosophy seeks to integrate content early and often.

The risk of not doing this is that the development team only works with a theoretical body of content. Sure, they’ve roughed some in to the extent they need to continue development, but it’s not actual content – it was contrived simply so they could keep developing, and it was created by someone with intimate knowledge of how the system works. Consciously or subconsciously, the roughed-in content was designed to avoid delay, not to accurately represent the real world.

Real content has warts. It frequently has bumps and bruises and doesn’t quite match up with what you envision. You need to account for missing data, varying formats, problems with length, missing relationships, etc.
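One way to surface warts early is to run each migrated record through a few checks. The field names (`title`, `body`, `published`, `author_id`), the ISO date format, and the 100-character title limit in this Python sketch are all assumptions for illustration.

```python
import re

# Assumption: the new system expects dates as YYYY-MM-DD.
DATE_ISO = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def find_warts(record: dict, max_title: int = 100) -> list:
    """Return a list of problems found in one migrated record."""
    problems = []
    for key in ("title", "body", "published"):
        if not record.get(key):
            problems.append(f"missing {key}")  # missing data
    title = record.get("title") or ""
    if len(title) > max_title:
        problems.append(f"title longer than {max_title} characters")  # length
    published = record.get("published")
    if published and not DATE_ISO.match(published):
        problems.append(f"unexpected date format: {published}")  # varying formats
    if record.get("author_id") is None:
        problems.append("no author relationship")  # missing relationship
    return problems
```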

The prior warning about developing in an “administrator bubble” holds just as true for content. Don’t develop in a “contrived content bubble.” Unless the development team occasionally has to stop and deal with content that is representative of what the site will eventually have to manage, they’re going to take liberties and ignore edge cases that will cause problems down the road.

Templating

By this point, the environment is set up, the CMS is installed, the content model is created, and roughed-in content is available.

Finally, it is time for templating. This is the moment you’ve been waiting for, when you actually get to generate some presentations of the content you’re implementing this system to manage.

Templating comes in two forms:

- The surround, which is the outer shell of each page

- The object, which is the specific object you’re presenting

Remember, the output of the object template is “injected” into the surround. Your object is templated, then this output is nested inside the shell of the surround.
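A minimal Python sketch of that nesting, assuming plain dictionaries for content and f-strings in place of a real templating language. Note that `body` is treated as trusted rich text and inserted unescaped, while the title is escaped.

```python
from html import escape


def render_article(obj: dict) -> str:
    """Object template: it knows exactly which properties an Article has."""
    # body is assumed to be trusted rich text (HTML), so it is not escaped.
    return f"<h1>{escape(obj['title'])}</h1>\n<div>{obj['body']}</div>"


def render_surround(obj: dict, inner_html: str) -> str:
    """Surround template: the outer shell shared by every page."""
    return (
        f"<html><head><title>{escape(obj['title'])}</title></head>\n"
        f"<body><header>Site header</header>\n{inner_html}\n"
        "<footer>Site footer</footer></body></html>"
    )


def render_page(obj: dict) -> str:
    # The object is templated first; its output is then nested inside the surround.
    return render_surround(obj, render_article(obj))
```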

Surround templating

In most cases, the surround has to work for all content. Multiple surround templates are possible, but uncommon; usually the template architecture has a single surround that is flexible enough to adapt to all content types. Surrounds can sometimes even nest inside one another, but this is also rare.

Templating the surround is unique in that it doesn’t execute in a vacuum. The surround is dependent on the object being rendered for information. The information can be of two types:

- Discrete: Information drawn from the value of one or more attributes

- Relational: Information drawn from the position of the content object in relation to other content

Additionally, the surround will often retrieve other content objects and data structures in addition to the object being rendered, to provide data for other sections of the page.

The primary navigation, for instance, is often explicit, meaning there is a specific content aggregation that drives it. Systems with a content tree might depend on the top-level pages for this, while other systems might have a specific menu structure of some kind that lists these pages.

Again, the “tyranny of the tree” can be a problem here – if the top-level pages are in that position for reasons other than being the primary navigation options, then how do you depart from this? It’s not uncommon to see explicit menuing for primary navigation, even in systems that otherwise depend on their tree for navigation.

For example, the surround often depends on determining the correct navigation logic. A crumbtrail, clearly, only makes sense in relation to the content object being rendered. Where that object is located in the geography will dictate what appears in the crumbtrail.
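A crumbtrail is relational information: it comes entirely from the object's position in the tree. This sketch assumes a hypothetical `Page` with a `parent` reference and simply walks up to the root.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    """Hypothetical content object that knows its parent in the tree."""
    title: str
    parent: Optional["Page"] = None


def crumbtrail(page: Page) -> list:
    """Titles from the root down to the given page."""
    trail = []
    node = page
    while node is not None:
        trail.append(node.title)
        node = node.parent
    return list(reversed(trail))
```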

Additionally, many sites have a left sidebar menu. How do you know what appears there? Is it dependent on the current content object in some way? For instance, does it render all the items in the same section or group of content as the primary item? Is the menu hierarchical – does it “open”? How do we determine where and how it opens? Based on what criteria?

Despite this emphasis on global and relational information, some items in the surround are more directly contextual to the content being rendered. A common sidebar element such as the ubiquitous Related Content, for instance, depends on the actual content item being rendered and will extract information from that object to render itself. Do all objects have this information, or does the element in the surround have to account for varying information, and even hide itself if the primary object being rendered is not of a compatible type?
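A sketch of a surround element that hides itself, assuming (hypothetically) that compatible objects carry a `related` list of titles. Returning `None` lets the surround skip the element's markup entirely.

```python
from html import escape
from typing import Optional


def related_content_box(obj: dict) -> Optional[str]:
    """Surround element: render only if the current object carries related links."""
    related = obj.get("related")
    if not related:
        return None  # incompatible type or no data: hide the element entirely
    items = "".join(f"<li>{escape(title)}</li>" for title in related)
    return f"<aside><h2>Related Content</h2><ul>{items}</ul></aside>"
```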

A question often becomes: do elements like this get templated in the surround, or do we template them as part of the content object itself and then inject the results into the surround? Content object templates can often be divided into “sections,” some of which can be mapped to places in the surround. Thus, if the related content element only appears for one type, then perhaps the more appropriate place for it is with the object templating, rather than the surround templating. If this surround element is required in more than one template, however, this is an inefficient duplication of code, meaning it should be abstracted to an include or moved to the surround template.
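A minimal sketch of sections mapped into the surround, assuming a hypothetical convention where an object template returns a dictionary of named sections and the surround has matching slots (`main` and `sidebar`).

```python
from html import escape


def render_product(obj: dict) -> dict:
    """Hypothetical object template that returns named sections, not one string."""
    return {
        "main": f"<h1>{escape(obj['title'])}</h1>",
        "sidebar": "<aside>Related products</aside>",
    }


def render_surround(sections: dict) -> str:
    """Surround with named slots; a section the object doesn't supply stays empty."""
    return (
        "<main>" + sections.get("main", "") + "</main>"
        + '<div class="sidebar">' + sections.get("sidebar", "") + "</div>"
    )
```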

Object templating

Inside the surround lives the output created for a particular content object. This is usually far more straightforward than the surround, since the template knows what object it’s rendering and can make specific decisions based on safe assumptions about what data is available. The object template doesn’t have to work for all content, just a single content type.

Additionally, the operative object template doesn’t really have any dependence on the surround template. While the surround depends highly on the object, the inverse is usually not true. The object can often execute neither knowing nor caring what surround it will eventually be wrapped in.

Some content object templates are nothing more than one or two lines, perhaps to simply output the title and the body of a simple text page of content.

A single content object might have multiple templates for the same channel, depending on criteria. Most implementations will have a one-to-one relationship between type and template, but in some cases, the template might vary based on location or content, or on some particular combination of property values inside the content itself. (However, if several content types have multiple templates that vary considerably, some thought might be given to creating different types altogether.)
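Template selection by criteria can be as simple as a function consulted before rendering. The template file names, the `/press/` location rule, and the `layout` property in this sketch are hypothetical.

```python
def select_template(obj: dict) -> str:
    """Pick a template for one channel.

    Most types map one-to-one to a template; in this hypothetical setup,
    Article varies by location and by a property value.
    """
    type_name = obj["type"]
    if type_name == "Article":
        if obj.get("path", "").startswith("/press/"):
            return "article_press.html"  # varies by location
        if obj.get("layout") == "feature":
            return "article_feature.html"  # varies by property value
    return f"{type_name.lower()}.html"  # default: one template per type
```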

Templating (both the surround and the objects) can sometimes take up the majority of the development time of an implementation. With some teams, a single developer might be responsible for writing the frontend code (the HTML/CSS), while in other situations, a whole team might be responsible for that code specifically, and either provide it to the backend developers or create the templates themselves. If the latter, then the backend developer might “rough in” the templates, then give the frontend developers access to complete the code.

When templating is complete, the site will be navigable with all objects publishing correctly, and will appear largely complete.

Non-Content Integration and Development

It’s common to find CMS implementations that don’t entirely start and stop with the CMS alone. Many implementations involve external systems and data, or custom-programmed elements not dealing with content.

For example, a bank might have a custom-programmed loan application on its website. This is a complicated, standalone application that has absolutely nothing to do with the CMS – it doesn’t use CMS content to render, and doesn’t create or alter any content by its use. It just needs to “live” in the CMS so it can be viewed alongside all the other content in the CMS.

The ability for non-CMS functionality to exist within a content-managed website varies. Some systems “play nice” with non-CMS code that needs to run inside it, but some systems are “jealous” and make it very difficult to do custom programming within the bounds of the CMS.

A developer can always write custom code outside the system, and simply have the executable application or files accessed without invoking the CMS, but a lot of CMS-related functionality is then lost. Ideally, the custom code can execute within the scope of the CMS and take advantage of the surround templating, URL management, permissions, etc.

Using this method, the output of our loan application could be “wrapped” by the surround, and by using a proxy content object, the page containing the application could be “placed” inside the CMS geography so the surround has context to work from when rendering.
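A sketch of the proxy approach, with every name hypothetical: `ProxyPage` stands in for the proxy content object, and `loan_application` for the custom code, which knows nothing about the CMS. The proxy supplies the context (here, just a crumbtrail) that the surround needs.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProxyPage:
    """Hypothetical proxy content object: it holds no content of its own,
    it just gives an external application a place in the content tree."""
    title: str
    path: str
    app: Callable[[dict], str]  # the non-CMS application
    parent_title: Optional[str] = None


def loan_application(request: dict) -> str:
    # Stand-in for custom code that knows nothing about the CMS.
    step = request.get("step", "1")
    return f"<form>Loan application, step {step}</form>"


def render(proxy: ProxyPage, request: dict) -> str:
    """Run the application, then wrap its output using the proxy's context."""
    app_html = proxy.app(request)
    crumb = " > ".join(t for t in (proxy.parent_title, proxy.title) if t)
    return f"<nav>{crumb}</nav>\n{app_html}"
```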

Problems arise when migrating from a different system running on an entirely different technology stack. In these cases, the application simply has to be rewritten for the new system’s technology stack, which might be a considerable investment – perhaps even greater than the CMS implementation itself.

Some situations might require functionality to be hosted externally and brought into the website via reverse proxy, or – less ideally – an IFRAME. Even less desirable would be to have to transition the user to another website entirely (for example, “loanapp.bigbank.com” for the previous application example). Moving users between sites often produces a jarring visual and UX shift, along with other technical issues such as cookie domain mismatches and authentication problems.

The practice of content integration

These issues live under the heading of “content integration,” which is the process of combining external data with content in a CMS installation. There are dozens of models for accomplishing this, all with advantages and disadvantages, and as with everything, the best fit depends on requirements:

- Where does the external data live?

- How volatile is it? How often does it change?

- Does it need to be moved into the CMS, or can it be accessed where it is?

- If it needs to be moved into the CMS for access, what does the schedule need to be? How “stale” can it be allowed to get before being refreshed?

- Does each record in the external source correspond to a complete content object, or are we simply adding content to an existing object?

- What is the access latency? How fast can it be retrieved?

- How stable is the connection between the CMS and the data source?

- Will it ever need to be modified in the CMS environment and sent back?

- What are the security issues? Can anyone have access to it?

- Do individual records need to be URL addressable, or is the data only meant to be viewed in aggregate?

For example, universities often want to have their course descriptions available on their websites. However, these descriptions are invariably maintained in a separate software system. In these cases, a scheduled job might execute every night, detect course descriptions that have changed in the last 24 hours, and update corresponding content objects in the CMS with that data. The descriptions of the content objects wouldn’t be editable directly in the CMS, since that isn’t the “home” for this data, and they can be overwritten whenever the external data changes.
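A minimal Python sketch of that nightly job, assuming the external system can be read as a list of records with a `modified` timestamp and that CMS objects are keyed by course code. Both structures are hypothetical stand-ins for the real systems' APIs.

```python
from datetime import datetime, timedelta


def nightly_sync(external: list, cms: dict, now: datetime) -> list:
    """Copy course descriptions changed in the last 24 hours into CMS objects.

    external: hypothetical records from the course system, each with
              'code', 'title', 'description', and a 'modified' datetime.
    cms:      hypothetical content objects keyed by course code.
    Returns the course codes that were created or updated.
    """
    cutoff = now - timedelta(hours=24)
    updated = []
    for course in external:
        if course["modified"] < cutoff:
            continue  # unchanged since the last run
        obj = cms.setdefault(course["code"], {})
        obj["title"] = course["title"]
        obj["description"] = course["description"]  # external system always wins
        obj["description_editable"] = False  # the CMS isn't this data's home
        updated.append(course["code"])
    return updated
```

The job overwrites the description unconditionally, since the external system is the source of truth; any edits made in the CMS would be lost on the next run, which is why the field is marked read-only.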

The potential needs for content integration are so vast that the discipline largely boils down to a set of practices, tools, and raw experience that are applied individually to each situation.

Production Environment Planning and Setup

Sometime during the development process, the production environment needs to be planned, created, and tested, and the developing website should be deployed. Basic questions need to be answered about physical/logical locations, the organization’s relationship to the environment (both technically and administratively), and the technical parameters of the environment.

Hosting models

Common hosting models are:

- On-premise hosting, in the organization’s own data center

- Third-party hosting under the organization’s control, where the organization creates a hosting account on a platform like Microsoft Azure or Amazon Web Services and manages this environment directly

- Third-party “managed” hosting under external control, where the organization cedes control over the hosting to another vendor (often the integrator, as part of a package deal, or the CMS vendor)

Like almost every other type of application, CMSs are trending toward the cloud more than on-premise hosting. Financial institutions and the healthcare sector held out longer than most due to privacy concerns (or the perception of such), but even those organizations are slowly giving up on on-premise installations.

Vendor-provided hosting is becoming more and more common as CMS vendors seek to transition from product companies to service companies. Many are bundling hosting and license fees together in a SaaS-like model. Some vendors are selling on-premise licenses only when directly asked for them – new sales are assumed to be cloud sales.

Hosting environment design

The ease of setting up virtual servers and redundant cloud architectures has completely changed how hosting is initiated and managed. Setting up servers used to be a time- and capital-intensive process.

A full discussion of application hosting is beyond the scope of this book, and thankfully it doesn’t differ remarkably from hosting other types of applications. When discussing hosting, major points to consider and discuss with your infrastructure team include:

- Fault tolerance and redundancy: How redundant is the environment, and how protected is it from architecture failures? Perfect, seamless redundancy is clearly the ideal situation, but it’s rarely fully realized and is often expensive.

- Failover and disaster recovery: If something does go wrong, what’s the process to recover? Will you restore from backup in a new environment, or will you maintain a second environment with a version of your content that you can cut over to?

- Performance: How much traffic can the website handle? How is it load-tested? How fast can it scale to increased load? Can scaling be scheduled in anticipation of increased load? (For example, can you plan a temporary or “burst” scaling of the environment for 72 hours in anticipation of increased traffic after a major product release?)

- Security and access: Who has access to the server? How is new code deployed to the website? Who can approve those deployments?

Regardless of the model and technical features of the environment, the environment needs to be available far enough in advance of launch to be load-tested, have backup and failover procedures established and tested, and have enough test deployments to ensure the process is free from error. What you clearly don’t want is an environment made available the night before launch.

Once the production environment is available, ensure that code deployments have been pushed all the way through and the development team has verified the site is functional in the environment. Many launch plans have been scrapped at the last minute due to unforeseen problems caused by a production deployment happening for the first time.

Training and Support Planning

Editors and administrators will need to be trained in the operation of the new CMS. Two different types of training exist:

- CMS training: This is generic training on the CMS at its defaults, which means training on Concrete5 or Sitecore or BrightSpot or whatever system your website was implemented in. This is usually provided by the vendor.

- Implementation training: This is training on your specific website, which means understanding how the generic CMS was wrapped around your requirements, and understanding concepts and structures that might exist in your website and no other. This can only be provided by a trainer familiar with the implementation, since the vendor has no knowledge or understanding of how its product was implemented.

Both are valuable, though the latter is more important for most editors. Understanding how the underlying CMS works is not without merit, but editors will primarily need to understand how the features of their particular site were implemented. Relevant aspects of the underlying architecture can be worked into that training as necessary, but it’s common to have a select few “product champions” in the organization who know the basic system inside and out, and for the rest of the editing team to simply have specific knowledge of the work within their professional jurisdiction.

Beyond the initial training, a plan should be put together for training future editors. In larger, more distributed organizations (e.g., a university), new staff might be hired each month who will need training on how to add and edit content in the CMS.

In addition to formal training hours, an ad hoc training and support process needs to be considered. What happens when an editor has a problem, or needs more in-depth help with a content initiative? Who is available to walk editors through the system on short notice? And how will the CMS fit into the organization’s existing IT support infrastructure? Is the IT help desk aware of the new CMS? Do they know how to use it? How do problems get escalated?

Sadly, training is seldom given much attention, and many organizations do as little as possible in the hopes that it will all “just work out.” Remember that training is a direct foundation for user adoption. When users understand and are comfortable with the system, they are more likely to embrace it and use it to its fullest capabilities. More than one project has failed due to tepid user response that grew out of a lack of understanding and training.

Final Content Migration, QA, and Launch

We’ll be discussing content migrations at length in the next chapter. However, sometime late in the development cycle, final content migration will begin, when content starts entering the system in the form in which it will remain.

This content will need to be QA’d and edited, sometimes considerably. This never fails to take longer than planned, and launch date extensions are common while the editorial team slaves over the content in an attempt to “get it in shape” enough to launch.

The tail end of a CMS integration is never relaxed. Usually, the editors, developers, and project managers are juggling an ever-changing list of QA tickets and content fixes. You need to budget for an “all hands on deck” approach during the last few days or weeks of a CMS launch. The editorial team needs to be on standby for emergency content fixes.

Final features might still be under development right up until launch, though it’s common for many features to be thrown overboard in the mad scramble to launch. Many get deferred until the ubiquitous “Phase 1.1” project that’s invariably planned for immediately after the initial launch (whether it actually happens or not may be hotly debated).

Launch can be a complex affair, depending on whether the new site is taking the place of an existing site in an existing hosting environment or is being deployed to a new environment. The latter is always preferred, since then launch is simply a matter of changing where the domain name (DNS) resolves, rather than having to bring the site down, perform an installation and regression test, then release it. Given the ease of setting up new virtualized environments, it’s usually more efficient to deploy the site to a new, parallel environment, launch via DNS change, and then simply archive the old environment.

If you’re depending on a DNS change, UGC might have to be shut off temporarily. During DNS propagation – a process ranging from instantaneous to lengthy (up to 24 hours), depending on the user – some users will be interacting with the new site and some with the old site. While the editors should be creating content on the new site exclusively, there’s no way to ensure the same for users. In some cases, a user might have a DNS lag, make a comment on the old site, then have the DNS change occur, leaving the user looking at the new site and wondering why that comment is missing.
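One simple safeguard is to make the UGC shutdown a function of time. This sketch assumes a fixed 24-hour propagation window starting at the DNS change, checked on both the old and new sites; the window length is an assumption, not a guarantee about any particular DNS provider.

```python
from datetime import datetime, timedelta

# Assumption: allow the full 24 hours mentioned above for DNS propagation.
PROPAGATION_WINDOW = timedelta(hours=24)


def comments_enabled(cutover_start: datetime, now: datetime) -> bool:
    """Disable user-generated content from the DNS change until propagation
    is assumed complete, so no comments land on the old site in the meantime."""
    return not (cutover_start <= now < cutover_start + PROPAGATION_WINDOW)
```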

Plan and rehearse site launches in advance. There’s nothing more frustrating than having everything ready to launch and finding out that the one person able to change a DNS record has gone on vacation. Walk through the launch ahead of time, down to a minute-by-minute schedule, if necessary.

A technical reviewer noted: “Without totally overdoing this metaphor, you could extend it to keeping the house clean. Companies implementing a CMS are often like a family building a new house and then never taking out the garbage. When the house gets too smelly, they just build a new house.”

Of course, if the CMS has already been selected, this might be a moot point, but it could at least inform specifics of how that geography should be formed.

What gets trickier is when the integrator is external and is being paid for its work. While the integrator might pursue the second option in a genuine attempt to increase flexibility, the client might view the first option as clearly better and interpret the integrator’s plan as lack of skill, or – worse – a desire to deliver a shoddy product to increase its profit margin.

Elaborated on by Stewart Brand in How Buildings Learn: What Happens After They’re Built (Penguin).

Tyson said this, but it wasn’t recorded. Accounts differ as to whether he said “in the face,” “in the nose,” or “in the mouth.” Given how hard Iron Mike punched, I doubt this distinction is really necessary.

These tools are also known as “continuous integration” servers. The idea is that new code should continually be integrated into the whole so that problems can be found early, instead of doing infrequent builds of the entire solution that don’t reveal problems until late in the process. Submitting code that doesn’t work and prevents the solution from being successfully deployed is known as “breaking the build.” This is usually a source of ostracism and open derision among a developer’s colleagues.

Note that the test and integration websites might be on the same server, making them more accurately referred to as test and integration instances.

Project managers have been known to proudly report to management that they have “Completed the installation of the CMS!” while failing to mention that this impressive-sounding milestone might have been a three-minute process.

And this is to say nothing of all the content files created and managed in production, though thankfully those have to be “brought backward” to development less frequently.

It’s a peculiar truth that some of the most lively arguments in a development project can be philosophical disagreements about where code belongs. There might be no argument about the existential validity of a particular block of code or how it works, but two developers can have almost violent disagreements about what place in the codebase is the “correct” location for that code to live.

Another way to handle this would be via repository abstraction, as discussed in APIs and Extensibility. The course repository might be “mounted” in real time as a section of the repository.

I still remember installing and configuring Ektron CMS400.Net from a hastily burned installation CD (the server initially had no network access) for hours while standing in front of a physical server in a rack in some freezing data center back in the early 2000s. We’ve come a long way.

Michael Sampson has written an entire book of strategies to increase user adoption of new technologies. His User Adoption Strategies (The Michael Sampson Company) is one of the few books on the market to specialize in that subject.